Apparently, LLMs are really bad at playing chess

- Not all LLMs are equal: GPT-3.5-turbo-instruct stands out as the most capable chess-playing model tested.

- Fine-tuning is crucial: Instruction tuning and targeted dataset exposure dramatically enhance performance in specific domains.

- Chess as a benchmark: The experiment highlights chess as a valuable benchmark for evaluating LLM capabilities and refining AI systems.

Can AI language models play chess? That question sparked a recent investigation into how well large language models (LLMs) handle chess tasks, revealing unexpected insights about their strengths, weaknesses, and training methodologies.

While some models floundered against even the simplest chess engines, others—like OpenAI’s GPT-3.5-turbo-instruct—showed surprising potential, pointing to intriguing implications for AI development.

Testing LLMs against chess engines

Researchers tested various LLMs by asking them to play chess as grandmasters, providing game states in algebraic notation. Initial excitement centered on whether LLMs, trained on vast text corpora, could leverage embedded chess knowledge to predict moves effectively.
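To make the setup concrete, one common way to present a game state to a completion model is as PGN-style movetext ("1. e4 e5 2. Nf3 ...") that the model is asked to continue. The sketch below is a hypothetical illustration, not the prompt the researchers actually used. It builds such a prompt from a list of moves in standard algebraic notation. The helper name `build_pgn_prompt` and the placeholder header tags, which loosely mirror the "play as grandmasters" framing, are assumptions.

```python
def build_pgn_prompt(moves: list[str]) -> str:
    """Format moves (standard algebraic notation) as PGN-style movetext.

    Hypothetical helper for illustration only; not the study's prompt.
    """
    # Placeholder header tags (assumption) loosely echoing the grandmaster framing.
    header = '[White "Grandmaster A"]\n[Black "Grandmaster B"]\n\n'
    tokens = []
    for i, san in enumerate(moves):
        if i % 2 == 0:
            # White's moves are preceded by the move number, e.g. "1."
            tokens.append(f"{i // 2 + 1}.")
        tokens.append(san)
    if len(moves) % 2 == 0:
        # White to move: end on the bare move number, with no trailing space,
        # so the model's natural continuation is White's next move.
        tokens.append(f"{len(moves) // 2 + 1}.")
    return header + " ".join(tokens)


if __name__ == "__main__":
    print(build_pgn_prompt(["e4", "e5"]))
```

Here `build_pgn_prompt(["e4", "e5"])` returns the two header tags followed by `1. e4 e5 2.`. Ending on the bare move number rather than on a trailing space is a deliberate choice, for the reason the tokenization point in the findings describes.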

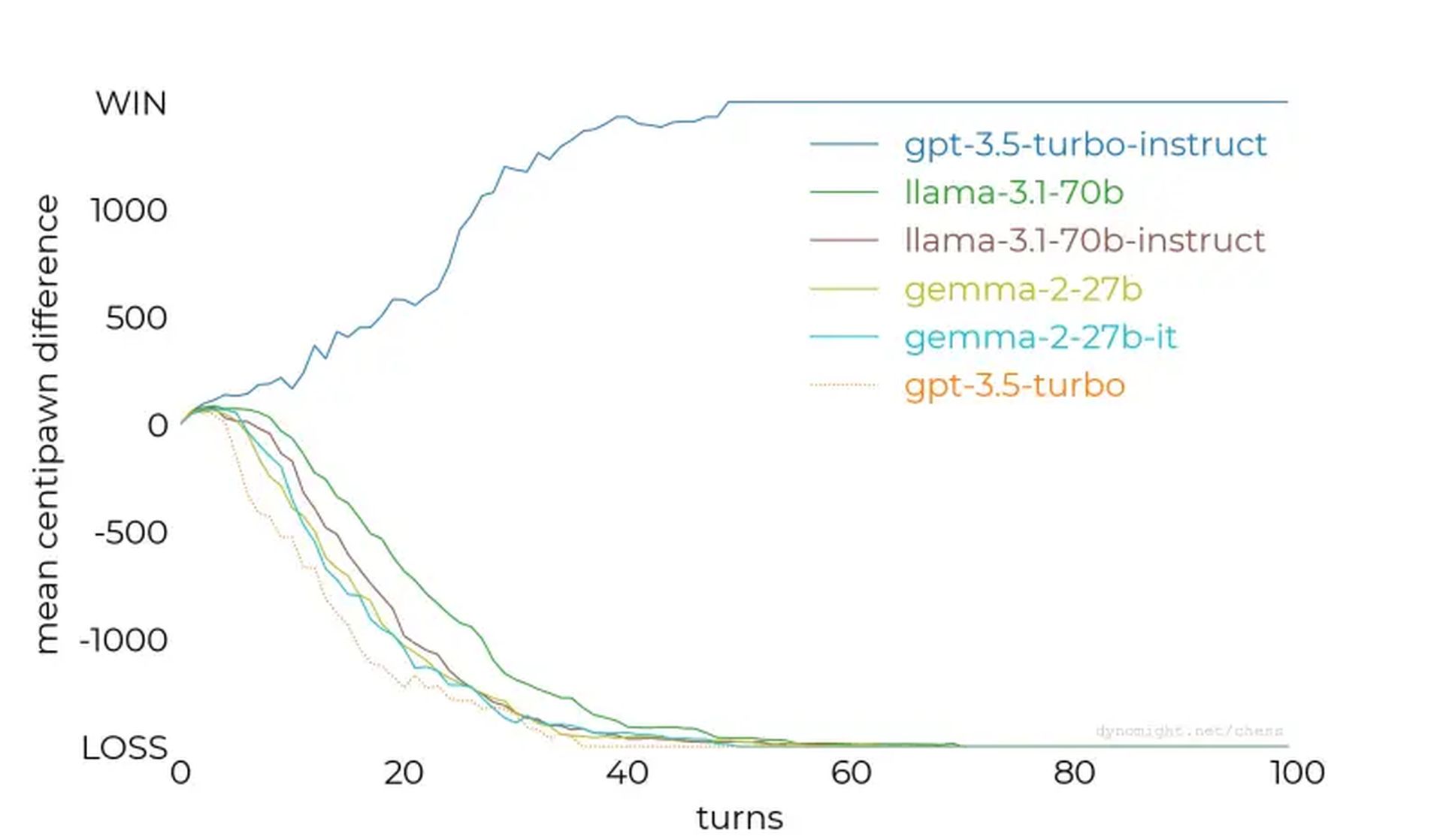

However, results showed that not all LLMs are created equal.

The study began with smaller models like llama-3.2-3b, which has 3 billion parameters. After 50 games against Stockfish’s lowest difficulty setting, the model lost every match, failing to protect its pieces or maintain a favorable board position.

Testing escalated to larger models, such as llama-3.1-70b and its instruction-tuned variant, but they also struggled, showing only slight improvements. Other models, including Qwen-2.5-72b and command-r-v01, continued the trend, revealing a general inability to grasp even basic chess strategies.

Smaller LLMs, like llama-3.2-3b, struggled with basic chess strategies, losing consistently to even beginner-level engines (Image credit)

GPT-3.5-turbo-instruct was the unexpected winner

The turning point came with GPT-3.5-turbo-instruct, which excelled against Stockfish, even when the engine’s difficulty level was increased. Unlike its chat-oriented counterparts, gpt-3.5-turbo and gpt-4o, the instruct-tuned model consistently produced winning moves.

Why do some models excel while others fail?

Key findings from the research offered valuable insights:

- Instruction tuning matters: Models like GPT-3.5-turbo-instruct benefited from human feedback fine-tuning, which improved their ability to process structured tasks like chess.

- Dataset exposure: There’s speculation that instruct models may have been exposed to a richer dataset of chess games, granting them superior strategic reasoning.

- Tokenization challenges: Small formatting details, such as misplaced spaces in prompts, disrupted performance, highlighting how sensitive LLMs are to input formatting.

- Competing data influences: Training LLMs on diverse datasets may dilute their ability to excel at specialized tasks, such as chess, unless counterbalanced with targeted fine-tuning.
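The tokenization point can be made more concrete. Many GPT-style tokenizers attach a space to the start of the following word. In training text, movetext therefore tends to appear as `2.` followed by a token such as ` Nf3`, while a prompt ending in `2. ` leaves a lone trailing space in a position the model rarely saw. That is one plausible explanation for why stray spaces hurt. The minimal sketch below assumes whitespace is the only formatting problem. It normalizes a prompt by collapsing runs of spaces and tabs and by stripping trailing whitespace from each line. `normalize_movetext` is a hypothetical helper, not part of any library or of the study.

```python
import re


def normalize_movetext(prompt: str) -> str:
    """Collapse runs of spaces/tabs and strip trailing whitespace per line.

    Hypothetical helper; assumes whitespace is the only formatting issue.
    """
    lines = []
    for line in prompt.split("\n"):
        line = re.sub(r"[ \t]+", " ", line)  # "1.  e4" -> "1. e4"
        lines.append(line.rstrip())          # "2. " -> "2."
    return "\n".join(lines)


if __name__ == "__main__":
    print(repr(normalize_movetext("1.  e4 e5 2. ")))
```

For example, `normalize_movetext("1.  e4 e5 2. ")` returns `"1. e4 e5 2."`. Blank lines, such as the gap between PGN header tags and the movetext, are preserved because the function works line by line.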

As AI continues to advance, these lessons could inform strategies for improving model performance across disciplines. Whether it’s chess, natural language understanding, or other intricate tasks, understanding how to train and tune AI is essential for unlocking its full potential.

Featured image credit: Piotr Makowski/Unsplash