Tried out CogVideoX, another open-source text-to-video AI

Tsinghua University and Zhipu AI have jointly introduced CogVideoX, an open-source text-to-video model poised to challenge AI heavyweights like Runway, Luma AI, and Pika Labs. Detailed in a recent arXiv paper, the model makes advanced video generation available to developers worldwide.

CogVideoX: New open-source text-to-video AI tool

“We introduce CogVideoX, large-scale diffusion transformer models designed for generating videos based on text prompts. To efficiently model video data, we propose to leverage a 3D Variational Autoencoder (VAE) to compress videos along both spatial and temporal dimensions. To improve the text-video alignment, we propose an expert transformer with the expert adaptive LayerNorm to facilitate the deep fusion between the two modalities. By employing a progressive training technique, CogVideoX is adept at producing coherent, long-duration videos characterized by significant motions,” the paper reads.

Tsinghua University is highly active in AI research and has several noteworthy projects to its name. It recently collaborated with MIT and MyShell on OpenVoice, an open-source voice cloning platform, and has now introduced CogVideoX-5B, a text-to-video model. It has also partnered with Shengshu Technology to launch Vidu AI, a tool designed to simplify video creation using AI.

CogVideoX can create high-quality, coherent videos up to six seconds long from simple text prompts.

The flagship model, CogVideoX-5B, has 5 billion parameters and produces videos at 720×480 resolution and 8 frames per second. These specs may not rival the latest proprietary systems, but the real breakthrough lies in CogVideoX’s open-source approach.

Open-source models are reshaping the field. By releasing the code and model weights to the public, the Tsinghua team has effectively democratized a technology that was once the domain of well-funded tech giants. This move is expected to accelerate advances in AI-generated video by drawing on the collective expertise of the global developer community.

The researchers achieved CogVideoX’s impressive results through several key innovations, including a 3D Variational Autoencoder for efficient video compression and an “expert transformer” designed to enhance text-video alignment.
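A quick back-of-the-envelope calculation shows why compressing along both space and time matters. The Python sketch below makes several assumptions. The 3D VAE is assumed to shrink time by a factor of 4 and each spatial side by a factor of 8. It is also assumed to output 16 latent channels. This article does not state these numbers; they are illustrative assumptions. The sketch also ignores boundary handling in a real encoder. `VideoShape` and `latent_shape` are hypothetical helpers and are not part of CogVideoX.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoShape:
    frames: int
    height: int
    width: int
    channels: int

    def numel(self) -> int:
        """Total number of values stored for this video or latent."""
        return self.frames * self.height * self.width * self.channels


def latent_shape(video: VideoShape, t_factor: int = 4, s_factor: int = 8,
                 latent_channels: int = 16) -> VideoShape:
    """Shape after compressing time by t_factor and each spatial side by s_factor.

    The default factors and latent channel count are assumptions for illustration,
    not values taken from the CogVideoX release.
    """
    if video.frames % t_factor or video.height % s_factor or video.width % s_factor:
        raise ValueError("dimensions must be divisible by the compression factors")
    return VideoShape(video.frames // t_factor, video.height // s_factor,
                      video.width // s_factor, latent_channels)


# Six seconds at 8 fps, 720x480 RGB, matching the specs quoted above.
clip = VideoShape(frames=6 * 8, height=480, width=720, channels=3)
latent = latent_shape(clip)
print(clip.numel())                    # 49766400
print(latent)                          # VideoShape(frames=12, height=60, width=90, channels=16)
print(clip.numel() // latent.numel())  # 48
```

Under these assumptions, the diffusion model works on roughly 48 times fewer values than the raw pixels. That illustrates why compressing along both axes helps make longer, coherent clips tractable.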

“To improve the alignment between videos and texts, we propose an expert Transformer with expert adaptive LayerNorm to facilitate the fusion between the two modalities,” the paper explains. This design lets the model interpret text prompts more precisely and generate videos that match them more accurately.
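The idea behind an expert adaptive LayerNorm can be sketched in plain Python. This is a minimal sketch under our own assumptions:

- Each token is normalized with a standard LayerNorm.
- A conditioning vector is mapped to per-channel scale and shift values. In a diffusion model this is typically the timestep embedding.
- The "expert" part is that text tokens and video tokens each get their own set of modulation weights. The two token groups are then joined into a single sequence.

The class name `ExpertAdaLN`, the random initialization, and the omission of any gating terms are illustrative choices. None of this is the paper's actual code.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Params = Tuple[List[Vector], Vector]  # (weight rows, bias)


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Normalize one token vector to zero mean and (near) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(params: Params, cond: Vector) -> Vector:
    """Compute weight @ cond + bias."""
    weight, bias = params
    return [sum(w * c for w, c in zip(row, cond)) + b for row, b in zip(weight, bias)]


class ExpertAdaLN:
    """Hypothetical sketch: one modulation 'expert' per modality, both driven by cond."""

    def __init__(self, dim: int, cond_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init() -> Params:
            # Each expert predicts dim scale values followed by dim shift values.
            weight = [[rng.gauss(0.0, 0.02) for _ in range(cond_dim)] for _ in range(2 * dim)]
            return weight, [0.0] * (2 * dim)

        self.dim = dim
        self.experts: Dict[str, Params] = {"text": init(), "video": init()}

    def modulate(self, tokens: List[Vector], cond: Vector, modality: str) -> List[Vector]:
        params = linear(self.experts[modality], cond)
        scale, shift = params[: self.dim], params[self.dim :]
        return [
            [n * (1.0 + s) + h for n, s, h in zip(layer_norm(tok), scale, shift)]
            for tok in tokens
        ]

    def __call__(self, text: List[Vector], video: List[Vector], cond: Vector) -> List[Vector]:
        # Each modality is modulated by its own expert, then the tokens are
        # concatenated into a single sequence (text first, then video).
        return self.modulate(text, cond, "text") + self.modulate(video, cond, "video")


norm = ExpertAdaLN(dim=4, cond_dim=3)
text_tokens = [[0.1, 0.2, 0.3, 0.4], [1.0, 0.0, -1.0, 2.0]]
video_tokens = [[5.0, 4.0, 3.0, 2.0]] * 3
out = norm(text_tokens, video_tokens, cond=[0.5, -0.2, 1.0])
print(len(out), len(out[0]))  # 5 4
```

In this sketch, zeroing both experts' weights reduces the block to a plain LayerNorm. With non-zero weights, the same conditioning signal rescales and shifts text and video tokens differently before they are processed together.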

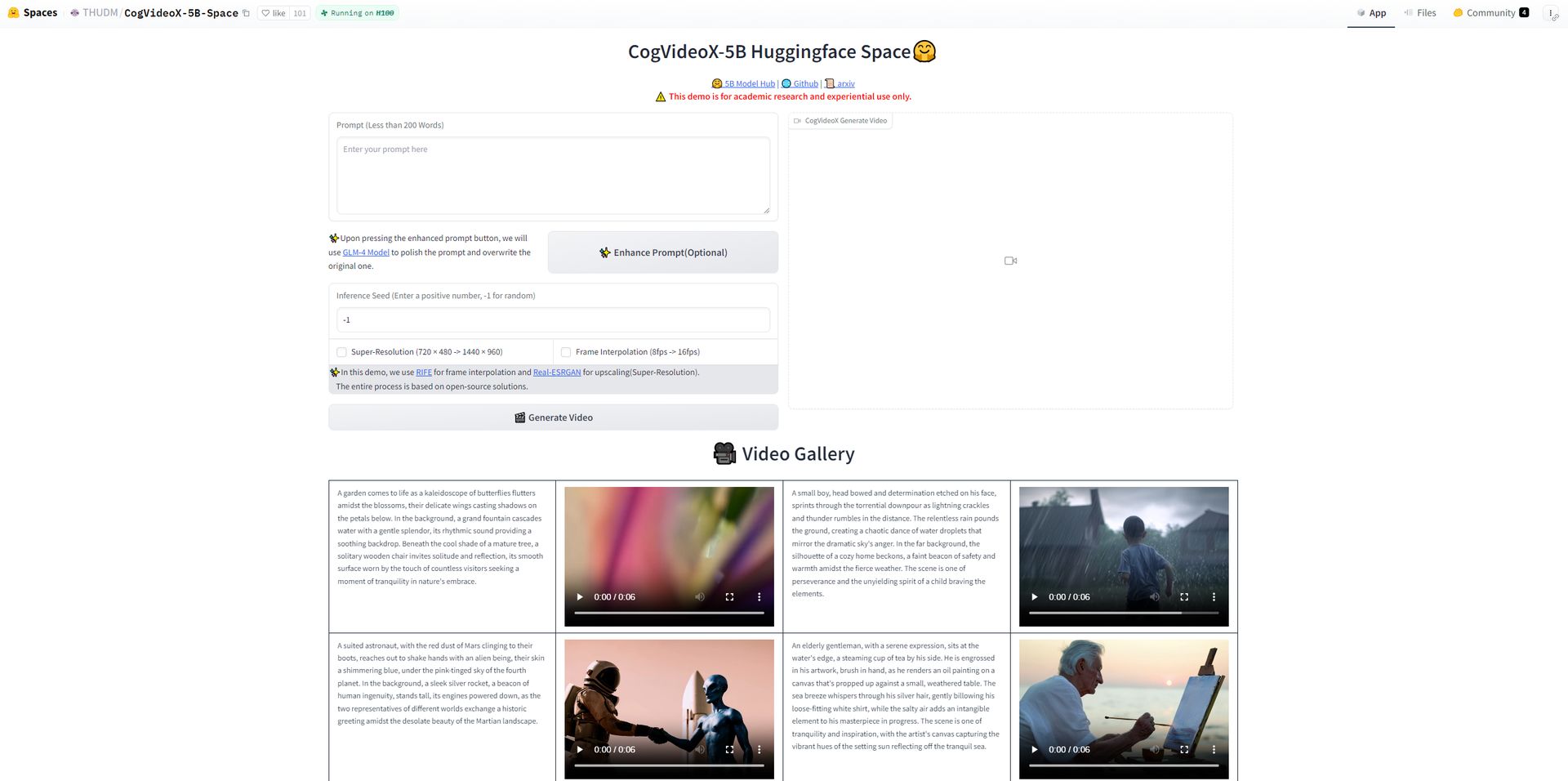

How to try CogVideoX?

- Start by heading over to Hugging Face, where the CogVideoX-5B open-source video generation tool is available for testing.

Step 1

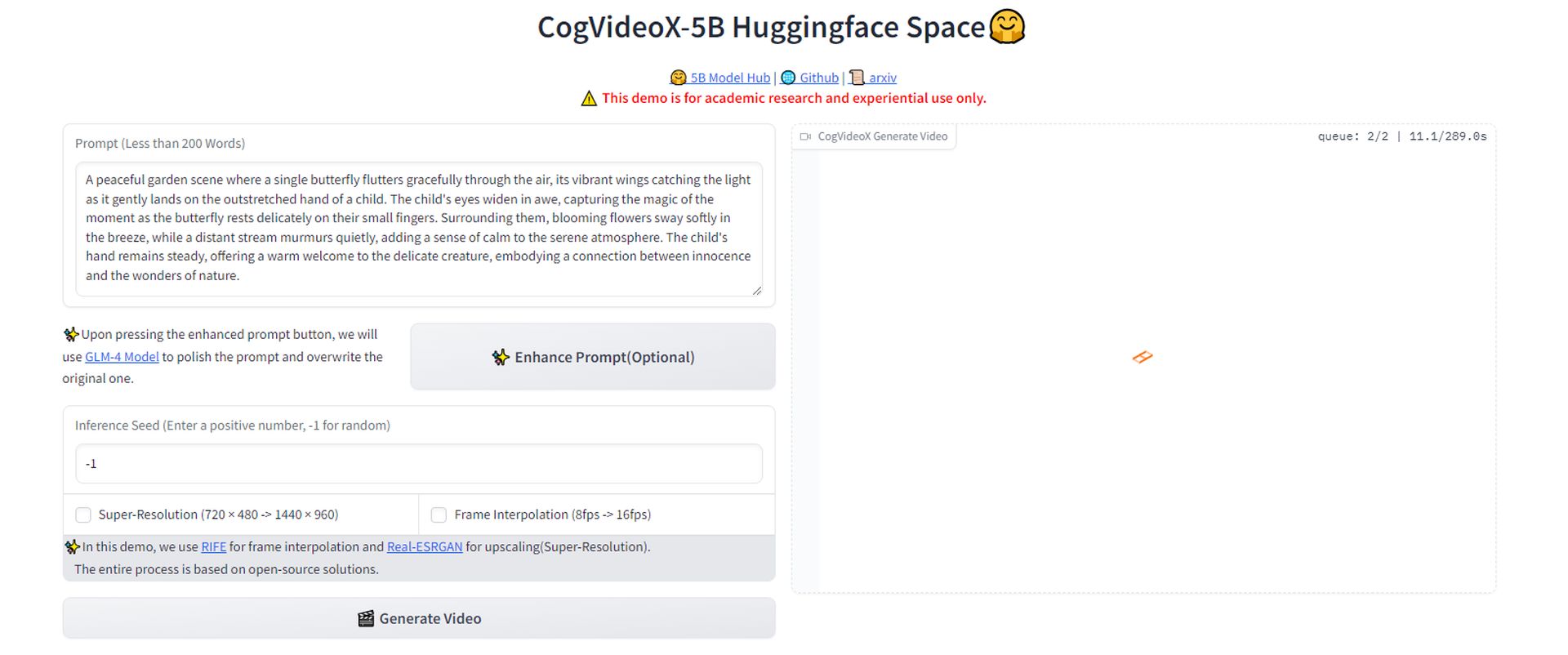

- Craft a descriptive prompt for the video you want to generate. For example, we used:

- Once your prompt is ready, click the button to generate the video. You’ll need to wait a moment while the tool processes your request and creates the video based on your description.

Step 2

- After the video is generated, you can download it directly from the platform to review the result and see how accurately the tool interpreted your description.

Step 3

- Check out the video. The result may not be mind-blowing, but tools like this are improving rapidly. Just as we saw with the evolution of ChatGPT, a significant breakthrough in AI-generated video is likely on the horizon.

Tried it out—while it’s not mind-blowing yet, these tools are popping up everywhere. Expecting a breakthrough soon, just like we saw with ChatGPT. pic.twitter.com/53xYz6lBLf

— Kerem Gülen (@kgulenn) August 28, 2024

We’re going to see more and more deepfakes

Yet the broad availability of such powerful technology is not without its dangers. The potential for misuse, particularly in crafting deepfakes or misleading content, is a serious issue that the AI community must confront. The researchers themselves acknowledge these ethical concerns and urge that the technology be used responsibly.

As AI-generated video becomes increasingly accessible and advanced, we’re venturing into unknown territory in digital content creation. The launch of CogVideoX could mark a pivotal moment, potentially shifting power from the big players in the field toward a more open, decentralized model of AI development.

The true effects of this democratization are still uncertain. Will it create a new wave of creativity and innovation, or will it worsen the existing problems of misinformation and digital manipulation?

Featured image credit: Kerem Gülen/Midjourney