Les Ministraux: Ministral 3B and 8B models bring GenAI to the edge

In a world dominated by bloated AI models that live in the cloud, Mistral AI is flipping the script. The French startup just unleashed two new models—Ministral 3B and 8B—that are designed to run on edge devices.

Les Ministraux: Ministral 3B and 8B

Mistral’s new offerings, dubbed “Les Ministraux,” might sound like a French art-house film, but these models are poised to shake up the AI world. With just 3 billion and 8 billion parameters respectively, the Ministraux family is all about efficiency. Forget those resource-hogging AI models that require a data center to function.
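To put those parameter counts in perspective, here is a back-of-the-envelope sketch of how much memory the weights alone would need. It assumes the nominal 3 billion and 8 billion figures quoted above and a few common storage precisions; the precision table and the function name `weight_memory_gb` are illustrative choices, not anything Mistral has published. It also ignores activations and the attention cache, which add to the total at run time.

```python
# Rough estimate of weight storage for a model, under stated assumptions.
# Parameter counts are the nominal 3B / 8B figures; real counts may differ.
# The precisions below are illustrative, not Mistral's shipped formats.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {"Ministral 3B": 3e9, "Ministral 8B": 8e9}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def footprint_table() -> dict:
    """Map each model name to its estimated footprint per precision."""
    return {
        name: {p: weight_memory_gb(n, p) for p in BYTES_PER_PARAM}
        for name, n in MODELS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        cells = ", ".join(f"{p}: {gb:.1f} GB" for p, gb in row.items())
        print(f"{name}: {cells}")
```

Under these assumptions, the 3B model's weights fit in a few gigabytes once quantized, which is the range of memory found on recent phones and laptops.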

“Our most innovative customers and partners have increasingly been asking for local, privacy-first inference for critical applications,” Mistral explained.

Context length like never before

Here’s where it gets really spicy: both the 3B and 8B models can handle a context window of 128,000 tokens. That is enough to hold a full-length book. For comparison, OpenAI’s GPT-4 Turbo offers the same 128,000-token window, which OpenAI describes as more than 300 pages of text, so matching it in models this small is no small feat.

With this kind of capacity, the Ministraux models don’t just outperform their predecessor, the Mistral 7B—they’re also eating Google’s Gemma 2 2B and Meta’s Llama models for breakfast.

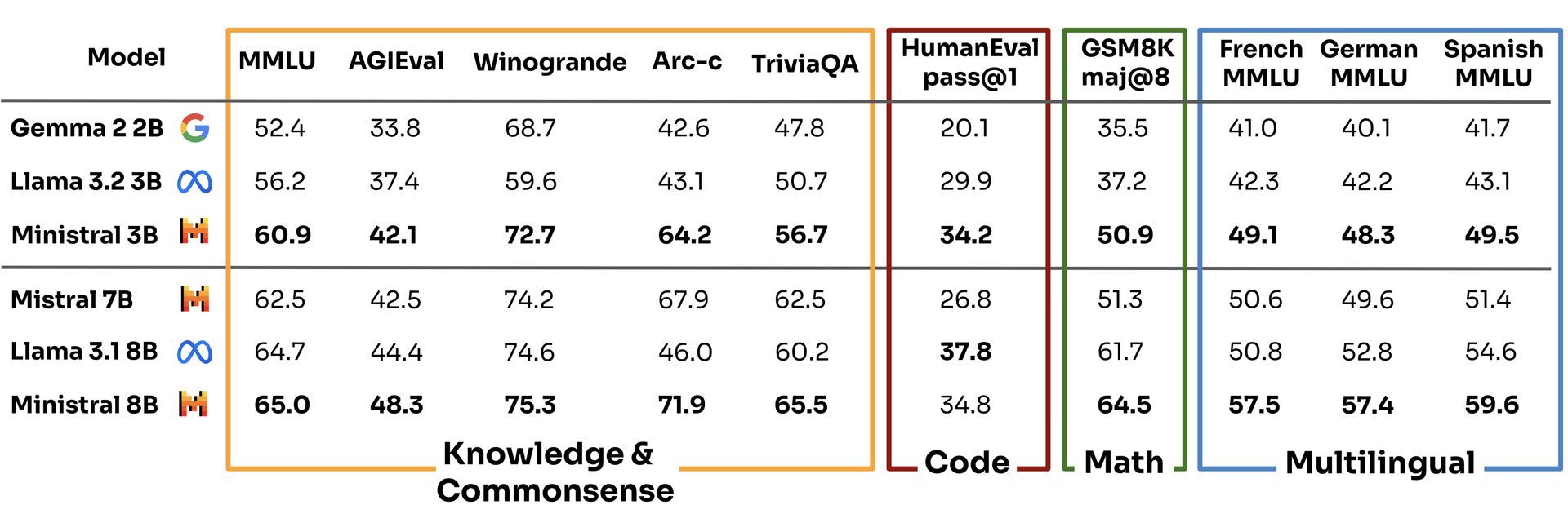

Ministral 3B and 8B models compared to other models

According to Mistral’s own benchmarks, the 3B model scored 60.9 on the Massive Multitask Language Understanding (MMLU) evaluation, ahead of Llama 3.2 3B at 56.2 and Gemma 2 2B at 52.4.

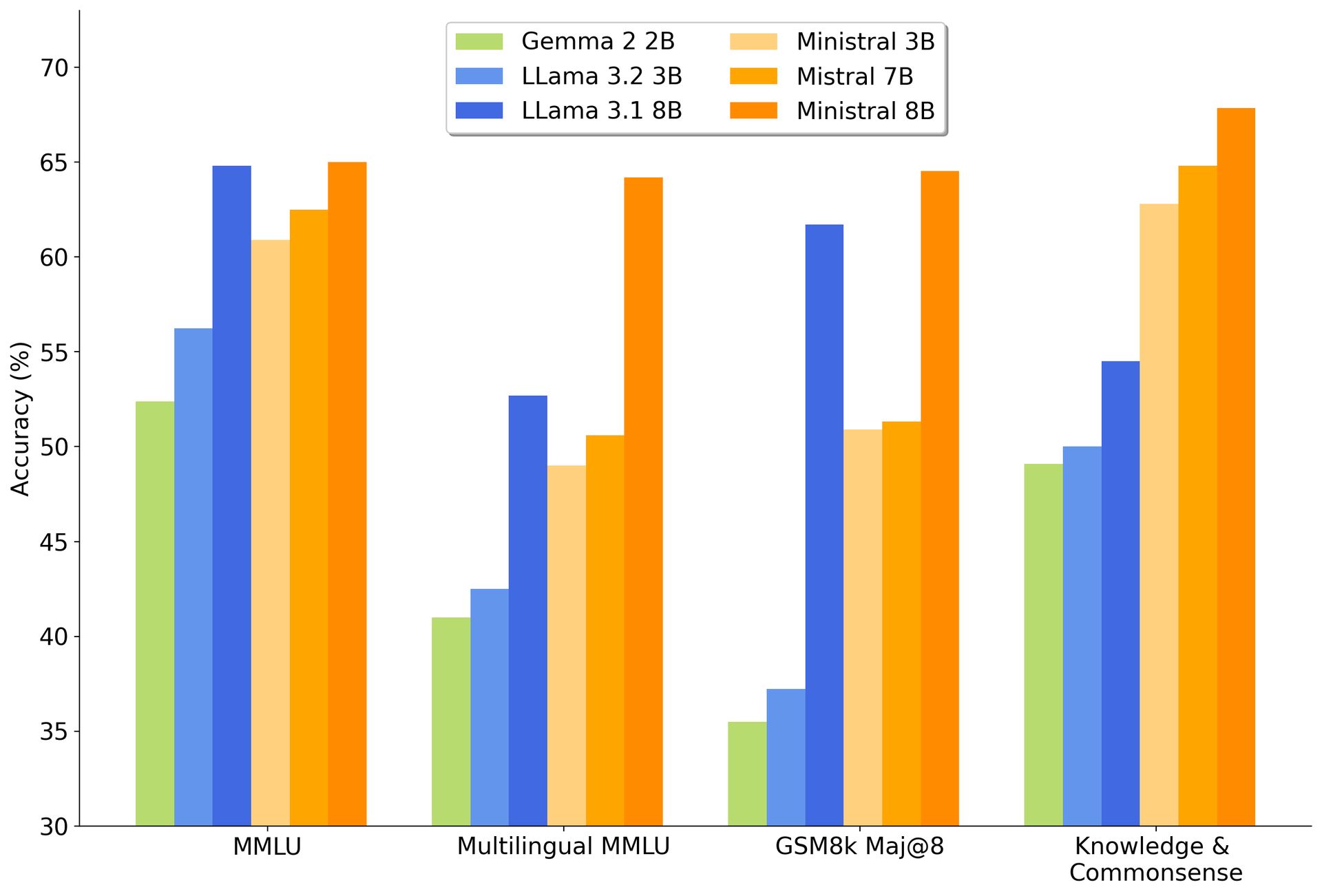

Ministral 3B and 8B benchmarks

Not bad for a “smaller” model, right?

While everyone else in the AI world is scrambling to make bigger, badder models that guzzle energy, Mistral is playing a different game. By running on local devices, Les Ministraux cut out the need for massive cloud servers and, in doing so, offer a much more eco-friendly option. It’s a move that aligns perfectly with the increasing pressure on tech companies to be more environmentally conscious. AI might be the future, but nobody wants that future to come at the cost of the planet.

There’s also the privacy angle. With everything running locally, your data stays on your device, which is a huge win for industries like healthcare and finance that are increasingly under the microscope for how they handle sensitive information. You can think of it as AI without the snooping—a refreshing change in a world where every app seems to be collecting more data than the NSA.

Mistral’s master plan: Build, disrupt, repeat

But let’s not kid ourselves: Mistral isn’t just doing this for the greater good. The company, co-founded by alumni from Meta and Google’s DeepMind, is in the business of making waves. They’ve already raised $640 million in venture capital and are laser-focused on building AI models that not only rival the likes of OpenAI’s GPT-4 and Anthropic’s Claude but also turn a profit in the process.

And while making money in the generative AI space is about as easy as winning the lottery, Mistral isn’t backing down. In fact, they started generating revenue this past summer, which is more than you can say for a lot of their competitors.

By offering Ministral 8B for research purposes and making both models available through their cloud platform, la Plateforme, Mistral is positioning itself as the cool kid on the AI block: open enough to attract developers, but smart enough to monetize its tech through strategic partnerships. It’s a hybrid approach that mirrors what open-source giants like Red Hat did in the Linux world, fostering community while keeping the cash registers ringing.

Image credits: Mistral