Kyutai introduces Moshi Chat, an AI that can both listen and speak in real time

Kyutai, a non-profit laboratory dedicated to open research in artificial intelligence (AI), has introduced Moshi Chat, a real-time, natively multimodal foundation model. Moshi Chat has drawn attention for its ability to listen and speak at the same time. Unlike traditional AI models, it is designed to understand and express emotions, making interactions with it more natural and engaging.

With its unique features and open-source availability, Moshi Chat stands out as a pioneer in the development of AI.

Moshi Chat’s development is a testament to Kyutai’s commitment to transparency and collaborative innovation. The model’s ability to handle two audio streams simultaneously—listening and speaking in real time—sets it apart from other AI models.

This capability is underpinned by a robust joint pre-training process on a combination of text and audio data, utilizing synthetic text data from Helium, a 7-billion-parameter language model developed by Kyutai. Such advancements in AI technology are the result of rigorous research and fine-tuning, aimed at achieving seamless and efficient performance.

Kyutai Moshi Chat’s technology

Moshi Chat’s standout feature is its real-time interaction capability, which allows it to listen and respond simultaneously. This is achieved through joint pre-training on a mix of text and audio data, ensuring that the model can maintain a smooth flow of textual and auditory information. The foundation of Moshi Chat’s speech processing abilities is the Helium model, a 7-billion-parameter language model that serves as the backbone for this innovative technology.

According to Kyutai’s Moshi Chat keynote on YouTube, the fine-tuning process involved an extensive dataset of 100,000 “oral-style” synthetic conversations. These conversations were converted to speech using Text-to-Speech (TTS) technology, similar to Murf AI, allowing the model to generate and understand speech with remarkable accuracy. The TTS engine, supporting 70 different emotions and styles, was fine-tuned using 20 hours of audio recorded by licensed voice talent. This meticulous approach to training has resulted in a model that not only understands spoken language but also conveys emotions and nuances, making interactions more natural and engaging.

Kyutai’s commitment to responsible AI use is evident in their incorporation of watermarking to detect AI-generated audio. This feature, still in progress, underscores the importance of ethical considerations in AI development. Additionally, the decision to release Moshi Chat as an open-source project highlights Kyutai’s dedication to fostering a collaborative environment within the AI community.

Moshi Chat understands and expresses emotions, making interactions more natural (Image credit)

Training and fine-tuning process of Moshi AI

The development of Moshi Chat involved a rigorous training and fine-tuning process. Kyutai trained the Helium 7B base text language model from scratch, then trained it jointly on text and codec-encoded audio.

The speech codec, based on Kyutai’s in-house Mimi model, boasts a 300x compression factor, which is instrumental in preserving the quality of the audio while reducing the data size.
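To put the 300x figure in perspective, the arithmetic below compares an uncompressed audio stream with its compressed counterpart. The 24 kHz sample rate and 16-bit mono PCM format are assumptions chosen for illustration; the only number taken from the text above is the compression factor.

```python
# Rough bitrate arithmetic for a speech codec with a 300x compression factor.
# ASSUMPTION: the uncompressed input is 24 kHz, 16-bit, mono PCM.
# Only the 300x factor comes from the article.

SAMPLE_RATE_HZ = 24_000    # assumed
BITS_PER_SAMPLE = 16       # assumed
CHANNELS = 1               # assumed
COMPRESSION_FACTOR = 300   # from the article


def raw_bitrate_bps(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bitrate of uncompressed PCM audio in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def compressed_bitrate_bps(raw_bps: int, factor: int) -> float:
    """Bitrate after applying a fixed compression factor."""
    return raw_bps / factor


raw = raw_bitrate_bps(SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS)
compressed = compressed_bitrate_bps(raw, COMPRESSION_FACTOR)

print(f"raw PCM:    {raw / 1000:.1f} kbit/s")         # 384.0 kbit/s
print(f"compressed: {compressed / 1000:.2f} kbit/s")  # 1.28 kbit/s
```

Under these assumptions, a stream of roughly 384 kbit/s shrinks to a little over 1 kbit/s, which is what makes streaming audio tokens through a language model practical.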

The fine-tuning process for Moshi Chat involved annotating 100,000 highly detailed transcripts with emotion and style. These annotations enable the model to understand and convey a wide range of emotions, making interactions with it more lifelike and engaging. The Text-to-Speech engine, which supports 70 different emotions and styles, was fine-tuned using 20 hours of audio recorded by a licensed voice talent named Alice.

Kyutai’s focus on adaptability is evident in Moshi Chat’s ability to be fine-tuned with less than 30 minutes of audio. This feature allows users to customize the model to suit specific needs, whether for research, language learning, or other applications. The model’s deployment showcases its efficiency and versatility, handling a batch size of two within 24 GB of VRAM and supporting multiple backends. Optimizations in inference code, such as enhanced KV caching and prompt caching, are anticipated to further improve Moshi Chat’s performance.

Moshi Chat is built on the Helium language model, a 7-billion-parameter model developed by Kyutai (Image credit)

Technology for everyone from Kyutai Labs

Moshi Chat is not only a technological marvel but also highly accessible. Kyutai has developed a smaller variant of the model that can run on a MacBook or a consumer-sized GPU, making it available to a wider range of users.

The model’s efficiency is further demonstrated by its deployment on platforms like Scaleway and Hugging Face, where it handles a batch size of two within 24 GB of VRAM and supports various backends, including CUDA, Metal, and CPU.
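A back-of-the-envelope calculation shows why a 7-billion-parameter model can fit within 24 GB of VRAM, and why a smaller or compressed variant is needed for laptops. The storage formats below are common choices assumed for illustration, not figures published by Kyutai, and the estimate covers weights only.

```python
# Memory needed just to hold the model weights.
# ASSUMPTION: the bytes-per-parameter values are common storage formats,
# not numbers published by Kyutai. Activations and caches add to this.

PARAMS = 7_000_000_000   # "7-billion-parameter" from the article
VRAM_BUDGET_GB = 24      # from the article

BYTES_PER_PARAM = {      # assumed formats
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(PARAMS, size)
    verdict = "fits" if gb < VRAM_BUDGET_GB else "does not fit"
    print(f"{name:>8}: {gb:5.1f} GB of weights, {verdict} in {VRAM_BUDGET_GB} GB")
```

At 16 bits per parameter the weights alone take about 14 GB, leaving headroom in a 24 GB card for activations and caches; at 32 bits they would not fit at all.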

The model’s voice was trained on synthetic data generated by a separate TTS model, and the system achieves an end-to-end latency of 200 milliseconds. This low latency is crucial for real-time interactions, allowing Moshi Chat to respond almost instantaneously to user inputs. The combination of advanced training techniques and optimized inference code, written in Rust, contributes to the model’s performance. Enhanced KV caching and prompt caching are also expected to improve the model’s efficiency further.
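For readers unfamiliar with KV caching, the toy sketch below illustrates the general idea: keys and values computed for earlier steps are stored, so each new step only computes them for the newest token. This is not Kyutai's inference code (which, per the article, is written in Rust); the `project` function is a hypothetical stand-in for learned projections, and all names are invented for illustration.

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention of one query over all keys and values."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(dim)]


def project(token, offset):
    """Hypothetical stand-in for a learned projection of a token."""
    return [math.sin(token * (i + 1) + offset) for i in range(4)]


class KVCache:
    """Keeps keys and values of past steps so they are never recomputed."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, token):
        # Only the newest token's key and value are computed here.
        self.keys.append(project(token, 1.0))
        self.values.append(project(token, 2.0))
        return attend(project(token, 0.0), self.keys, self.values)


def uncached_step(prefix):
    """Baseline: recompute every key and value for the whole prefix."""
    keys = [project(t, 1.0) for t in prefix]
    values = [project(t, 2.0) for t in prefix]
    return attend(project(prefix[-1], 0.0), keys, values)


tokens = [3, 1, 4, 1, 5]
cache = KVCache()
cached_outputs = [cache.step(t) for t in tokens]
uncached_outputs = [uncached_step(tokens[: i + 1]) for i in range(len(tokens))]

same = all(
    math.isclose(a, b)
    for out_c, out_u in zip(cached_outputs, uncached_outputs)
    for a, b in zip(out_c, out_u)
)
print("cached and uncached outputs match:", same)
```

Both paths produce the same outputs, but the cached version does a constant amount of projection work per step instead of work that grows with the length of the conversation, which matters for a model that must answer within a fixed latency budget.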

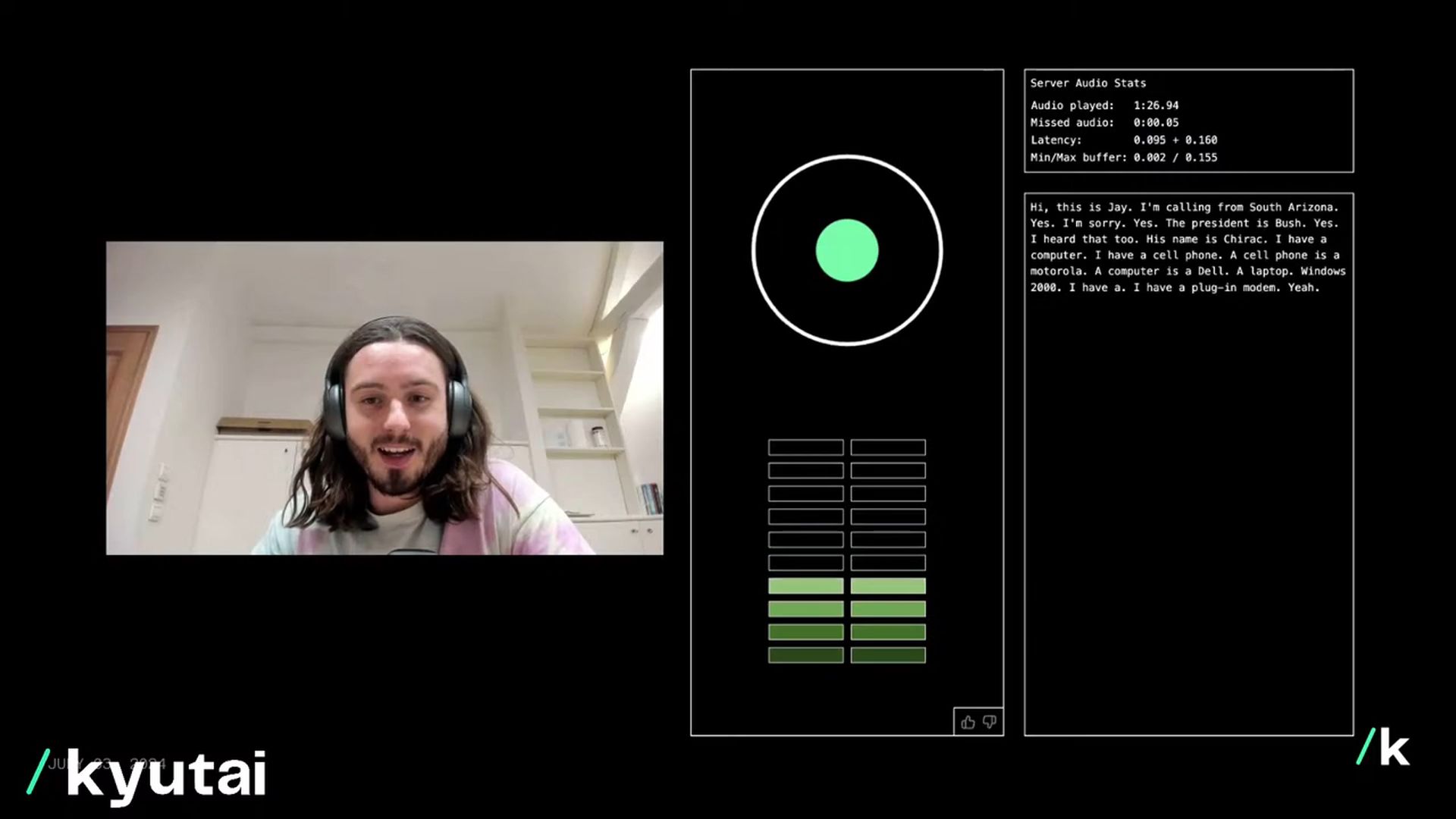

The demo posted by Yann LeCun showcases how well this new AI model works.

A live demo of Moshi from https://t.co/TngVXgSjzX https://t.co/qftjudOq1k

— Yann LeCun (@ylecun) July 3, 2024

Looking ahead, Kyutai has ambitious plans for Moshi Chat. The team intends to release a comprehensive technical report and open model versions, including the inference codebase, the 7B model, the audio codec, and the full optimized stack. Future iterations of Moshi Chat, such as versions 1.1, 1.2, and 2.0, will incorporate user feedback to refine and enhance the model’s capabilities.

Kyutai’s permissive licensing aims to encourage widespread adoption and innovation, ensuring that the benefits of Moshi Chat are accessible to a diverse audience.

How to use Moshi Chat

Users are encouraged to try Moshi Chat online via the Kyutai website. Once there:

- Enter your email

- Click “Join Queue”

- Start talking

Whether discussing everyday topics or exploring more complex subjects, users can engage with Moshi Chat naturally, benefiting from its advanced speech recognition and synthesis capabilities.

Featured image credit: Kyutai/YouTube