How Google’s DataGemma uses RAG to combat AI hallucinations

Google has taken another significant step in the race to improve the accuracy and reliability of AI models with the introduction of DataGemma, an approach that combines its Gemma family of large language models (LLMs) with the Data Commons project. The spotlight here is on a technique called retrieval-augmented generation (RAG). The method has been gaining traction in enterprises, and with DataGemma, Google aims to bring it into the AI mainstream.

At its core, RAG seeks to solve one of the biggest challenges faced by LLMs: the problem of hallucinations. In the world of generative AI, hallucinations refer to instances where the model generates information that sounds plausible but is factually incorrect. This is a common issue in AI systems, especially when they lack reliable grounding in factual data. Google’s goal with DataGemma is to “harness the knowledge of Data Commons to enhance LLM factuality and reasoning,” addressing this issue head-on.

What is RAG?

Retrieval-augmented generation is a game changer because it doesn’t rely solely on pre-trained AI models to generate answers. Instead, it retrieves relevant data from an external source before generating a response. This approach allows AI to provide more accurate and contextually relevant answers by pulling real-world data from repositories. In the case of DataGemma, the source of this data is Google’s Data Commons project, a publicly available resource that aggregates statistical data from reputable institutions like the United Nations.
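To make the retrieve-then-generate idea concrete, here is a minimal Python sketch. It is not DataGemma’s implementation: the three-document corpus is made up, simple word overlap stands in for a real retriever (production systems typically use search indexes or embeddings), and the hypothetical `build_prompt` helper only assembles the text that would be sent to an LLM. The model call itself is not shown.

```python
import re
from collections import Counter

# Toy corpus, made up for illustration (not real Data Commons content).
CORPUS = {
    "energy": "Share of electricity generated from renewables, by world region (placeholder).",
    "population": "Total population by country and year (placeholder).",
    "health": "Life expectancy at birth by country (placeholder).",
}


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric words; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) pairs that share at least one word with the query."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        # Counter & Counter keeps the minimum count of each shared word.
        overlap = sum((query_counts & Counter(tokenize(text))).values())
        scored.append((overlap, doc_id, text))
    # Highest overlap first; ties broken alphabetically by doc_id.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Place the retrieved passages ahead of the question so the model can cite them."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "Has the use of renewables increased in the world?"
    print(build_prompt(question, retrieve(question, CORPUS)))
```

Swapping the word-overlap scorer for a proper search index would change the ranking but not the overall shape: retrieve first, then generate from a prompt that already contains the evidence.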

This move by Google to integrate Data Commons with its generative AI models represents the first large-scale cloud-based implementation of RAG. While many enterprises have used RAG to ground their AI models in proprietary data, using a public data resource like Data Commons takes things to a whole new level. It signals Google’s intention to use verifiable, high-quality data to make AI more reliable and useful across a broad range of applications.

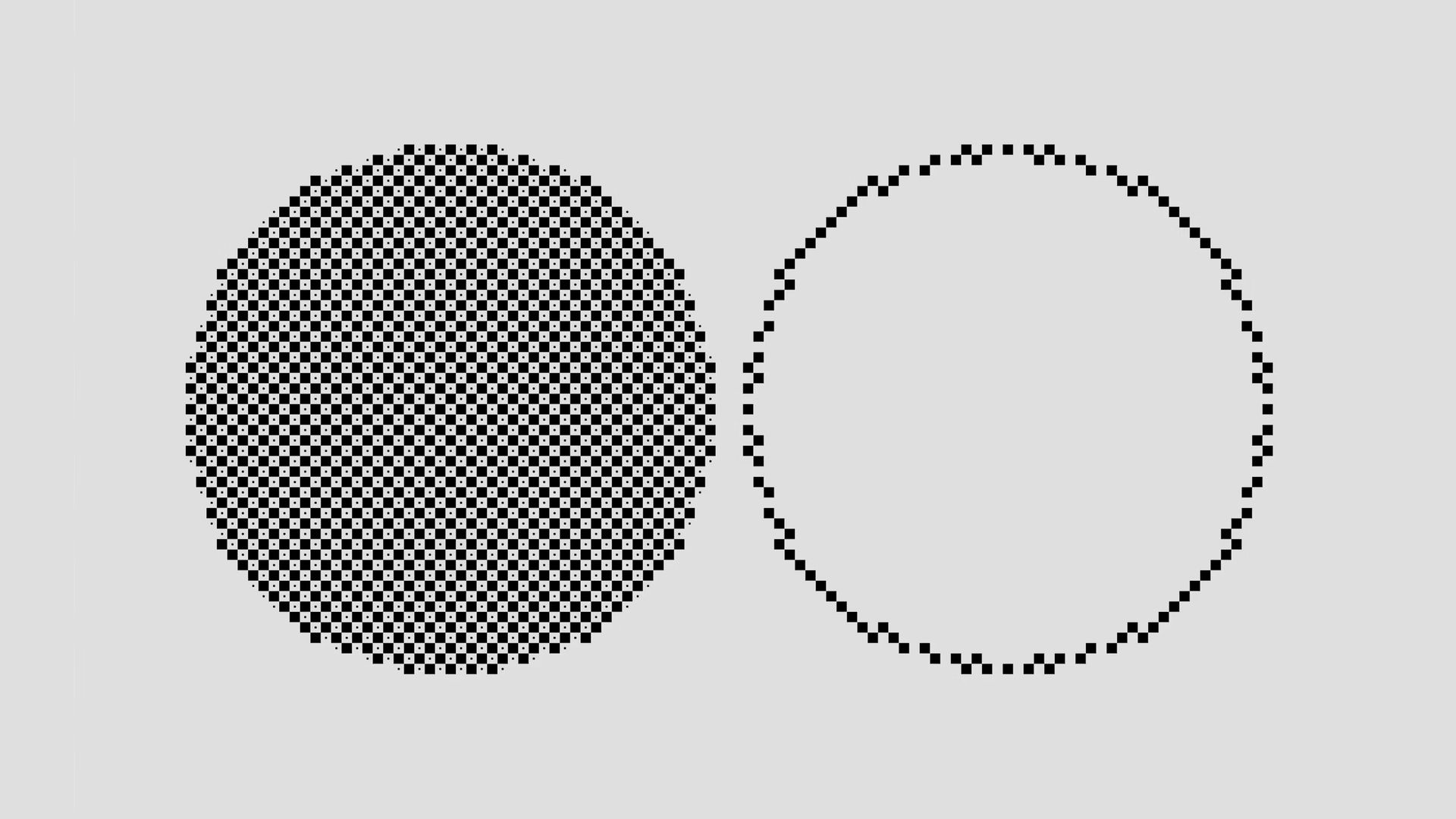

According to Google, DataGemma takes “two distinct approaches” to integrate data retrieval with LLM output (Image credit)

Google’s two-pronged approach

According to Google, DataGemma takes “two distinct approaches” to integrate data retrieval with LLM output. The first method is called retrieval-interleaved generation (RIG). With RIG, the model fetches specific statistics from Data Commons to fact-check the figures that appear in its response to the query. For example, if a user asks, “Has the use of renewables increased in the world?” the system can pull in up-to-date statistics from Data Commons and cite them directly in its response. This not only improves the factual accuracy of the answer but also provides users with concrete sources for the information.
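RIG can be pictured as a pass over the model’s draft answer. In this hypothetical sketch, the model marks each statistic it writes with a `[DC("query") -> "guess"]` annotation (an assumed format, not necessarily the one DataGemma uses), and a lookup function standing in for Data Commons replaces the guess with the retrieved value and its source. “Exampleland” and all of its numbers are fictional.

```python
import re
from typing import Callable, Optional

# Hypothetical annotation the model is assumed to emit around each statistic:
#   [DC("<natural-language query>") -> "<model's own guess>"]
STAT_CALL = re.compile(r'\[DC\("(?P<query>[^"]+)"\) -> "(?P<guess>[^"]+)"\]')

# Fictional store standing in for Data Commons: query -> (value, source).
TOY_STORE = {
    "share of electricity from renewables in Exampleland": ("40%", "toy statistics table"),
}


def interleave(draft: str, lookup: Callable[[str], Optional[tuple[str, str]]]) -> str:
    """Replace each annotated guess with a retrieved value, or flag it if nothing is found."""

    def substitute(match: re.Match) -> str:
        found = lookup(match.group("query"))
        if found is None:
            # No trusted figure available: keep the model's number but mark it.
            return f'{match.group("guess")} [unverified]'
        value, source = found
        return f"{value} [source: {source}]"

    return STAT_CALL.sub(substitute, draft)


draft = (
    'In Exampleland, [DC("share of electricity from renewables in Exampleland") -> "35%"] '
    "of electricity came from renewables."
)
print(interleave(draft, TOY_STORE.get))
# -> In Exampleland, 40% [source: toy statistics table] of electricity came from renewables.
```

Flagging unmatched figures as unverified, rather than silently keeping them, is one design choice among several; a system could also drop the sentence or ask the model to rephrase.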

The second method is more in line with the traditional RAG approach. Here, the model retrieves data to generate more comprehensive and detailed responses, citing the sources of the data to create a fuller picture. “DataGemma retrieves relevant contextual information from Data Commons before the model initiates response generation,” Google states. The aim is for the AI to have the necessary facts at hand before it begins generating an answer, reducing the likelihood of hallucinations.
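The RAG variant reverses the order: retrieval finishes before the model writes a single word. The sketch below assumes the statistical sub-queries for a question are already known (how DataGemma derives them is not covered here), looks each one up in a fictional store, numbers the results as citable sources, and only then calls `generate`, a placeholder for whatever LLM is used. All names and values are hypothetical.

```python
from typing import Callable, Optional

Lookup = Callable[[str], Optional[tuple[str, str]]]

# Fictional store standing in for Data Commons: query -> (value, source).
TOY_STORE = {
    "renewable electricity share in Exampleland, 2015": ("20%", "toy energy table"),
    "renewable electricity share in Exampleland, 2020": ("30%", "toy energy table"),
}


def grounded_prompt(question: str, stat_queries: list[str], lookup: Lookup) -> str:
    """Fetch every statistic first, then number each one as a citable source."""
    facts = []
    for query in stat_queries:
        found = lookup(query)
        if found is None:
            continue  # skip unanswerable queries instead of inventing a value
        value, source = found
        facts.append(f"[{len(facts) + 1}] {query}: {value} (source: {source})")
    context = "\n".join(facts) if facts else "(no matching statistics found)"
    return (
        "Use only the numbered facts below and cite them as [n].\n"
        f"{context}\n\nQuestion: {question}"
    )


def answer(question: str, stat_queries: list[str], lookup: Lookup,
           generate: Callable[[str], str]) -> str:
    """Retrieval completes before the (placeholder) model is called."""
    prompt = grounded_prompt(question, stat_queries, lookup)
    return generate(prompt)


if __name__ == "__main__":
    queries = [
        "renewable electricity share in Exampleland, 2015",
        "renewable electricity share in Exampleland, 2020",
        "renewable electricity share in Exampleland, 1900",  # not in the store
    ]
    # An echo "model" makes the assembled prompt visible.
    print(answer("Has renewable use grown in Exampleland?", queries, TOY_STORE.get,
                 generate=lambda prompt: prompt))
```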

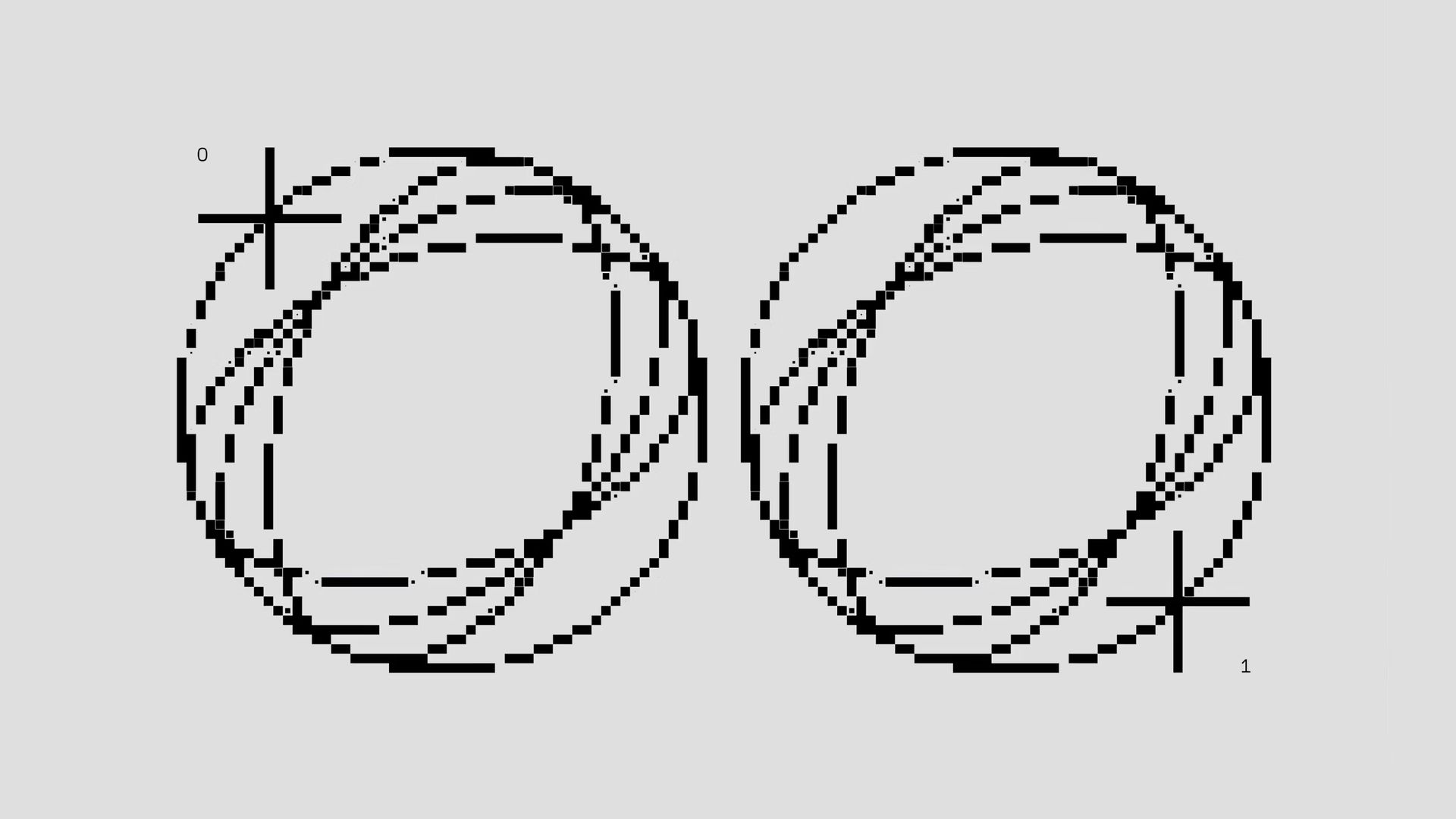

A key feature of DataGemma is the use of Google’s Gemini 1.5 model, which boasts an impressive context window of up to 128,000 tokens. In AI terms, the context window is the amount of text, measured in tokens, that the model can take in at once while processing a query. The larger the window, the more data the model can take into account when generating a response. Gemini 1.5 can even scale up to a staggering 1 million tokens, allowing it to pull in large amounts of data from Data Commons and use it to craft detailed, nuanced responses.

This extended context window is critical because it allows DataGemma to “minimize the risk of hallucinations and enhance the accuracy of responses,” according to Google. By keeping more of the retrieved data in its context, the model can check its output against real-world statistics, helping ensure that the answers it provides are not only relevant but also factually grounded.
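A context window works as a token budget shared by the question, the retrieved data and the answer. One simple way to fill it, sketched here as an assumption rather than a description of how DataGemma or Gemini behaves, is to add retrieved passages in relevance order and skip any that would overflow the budget. Word count is used as a crude stand-in for a real tokenizer, and the 128,000-token default simply mirrors the figure quoted above.

```python
from typing import Callable


def approx_tokens(text: str) -> int:
    """Crude proxy: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())


def pack_context(passages: list[str], budget: int = 128_000, reserve: int = 2_000,
                 count_tokens: Callable[[str], int] = approx_tokens) -> list[str]:
    """Keep passages (assumed sorted by relevance) while they fit in budget - reserve.

    `reserve` leaves room for the question and the model's answer.
    """
    available = budget - reserve
    packed, used = [], 0
    for passage in passages:
        cost = count_tokens(passage)
        if used + cost > available:
            continue  # too big for what's left; a shorter later passage may still fit
        packed.append(passage)
        used += cost
    return packed


if __name__ == "__main__":
    docs = ["a b c", "d e f g h", "i j"]
    print(pack_context(docs, budget=8, reserve=2))  # -> ['a b c', 'i j']
```

Raising `budget` toward the 1 million tokens mentioned above lets the same loop admit more retrieved passages before anything has to be left out.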

A key feature of DataGemma is the use of Google’s Gemini 1.5 model (Image credit)

Beyond LLMs

While the integration of RAG techniques is exciting on its own, DataGemma also represents a broader shift in the AI landscape. It’s no longer just about large language models generating text or answering questions based on what they’ve been trained on. The future of AI lies in its ability to integrate with real-time data sources, ensuring that its outputs are as accurate and up-to-date as possible.

Google is not alone in this pursuit. Just last week, OpenAI unveiled its “Strawberry” project, which takes a different approach to improving AI reasoning. Strawberry uses a method known as “chain of thought”, where the AI spells out the steps or factors it uses to arrive at a prediction or conclusion. While different from RAG, the goal is similar: make AI more transparent, reliable, and useful by providing insights into the reasoning behind its answers.

What’s next for DataGemma?

For now, DataGemma remains a work in progress. Google acknowledges that more testing and development are needed before the system can be made widely available to the public. However, early results are promising. Google claims that both the RIG and RAG approaches have led to improvements in output quality, with “fewer hallucinations for use cases across research, decision-making, or simply satisfying curiosity.”

It’s clear that Google, along with other leading AI companies, is moving beyond the basic capabilities of large language models. The next step is connecting models to external data sources, whether public databases like Data Commons or proprietary corporate data. By doing so, AI can overcome the limits of what it learned during training and become a more powerful tool for decision-making, research, and exploration.

Featured image credit: Google