Google announces stronger and enhanced Gemini models

Google has once again upped the ante for artificial intelligence with its recent announcement of improved versions of its Gemini AI models.

As the tech giant accelerates toward the release of Gemini 2.0, it is introducing three experimental models: Gemini 1.5 Flash-8B, a smaller variant of the existing Gemini 1.5 Flash; a significantly improved Gemini 1.5 Flash; and a stronger Gemini 1.5 Pro.

These updates, according to Google, represent significant strides in performance, particularly in areas like coding, complex problem-solving, and the ability to handle extensive data inputs.

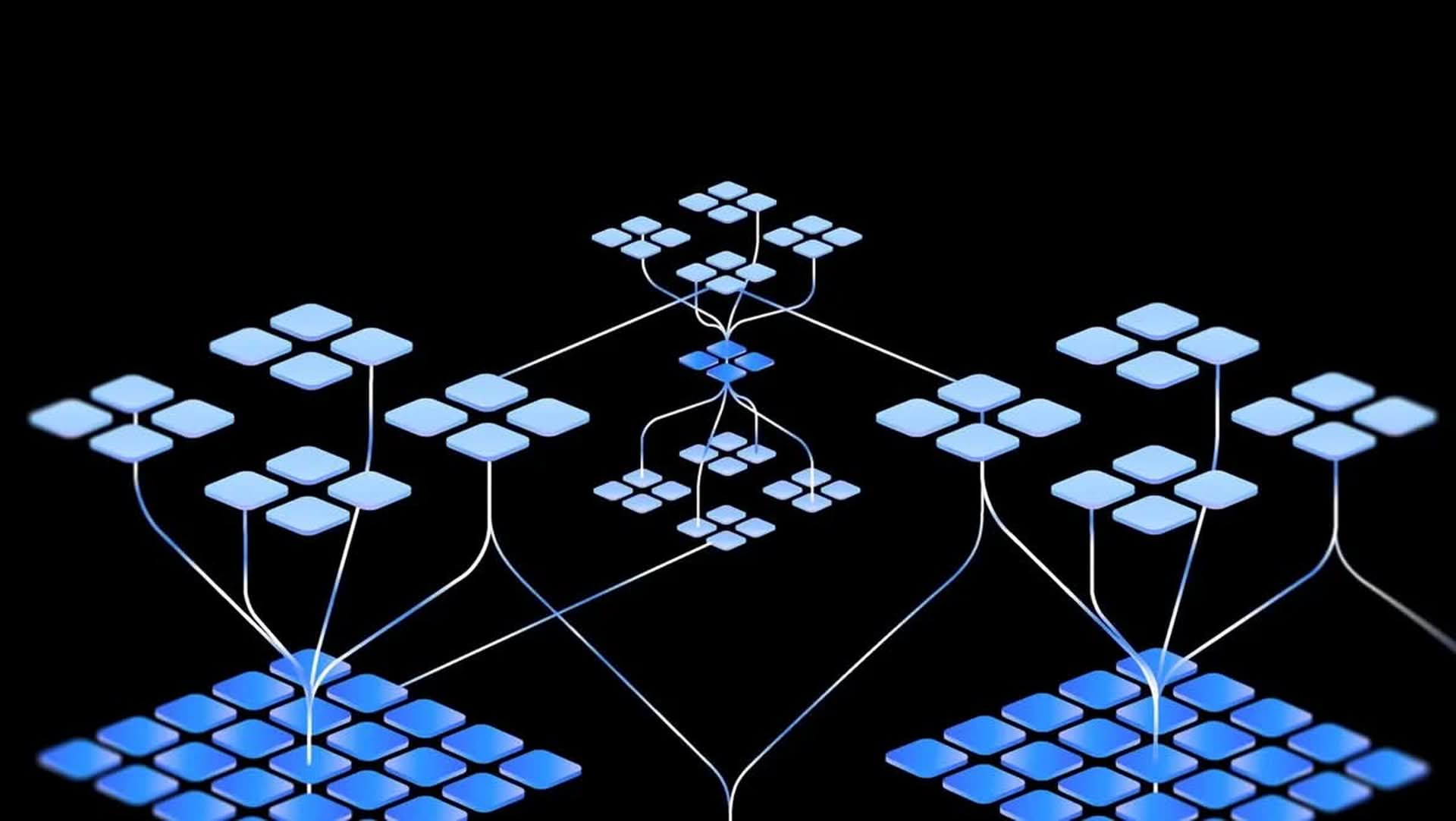

Gemini’s evolution

The latest iterations of the Gemini models are not just incremental updates but reflect Google’s strategy to lead the next wave of AI innovation. The Gemini 1.5 family, first introduced earlier this year, was designed with the capacity to manage long contexts and process multimodal inputs, such as documents, video, and audio, over large token sequences. This capability alone set a new standard for how AI can be applied in various domains, from research and development to practical applications in coding and content generation.

With the introduction of the Gemini 1.5 Flash-8B, Google has provided a more compact yet powerful variant that retains the core strengths of its predecessor. This model is tailored for efficiency without sacrificing the ability to process and reason over fine-grained information. It’s a move that aligns with the growing demand for AI models that can be deployed across a range of devices and platforms without the heavy computational costs traditionally associated with large language models (LLMs).

Today, we are rolling out three experimental models:

– A new smaller variant, Gemini 1.5 Flash-8B

– A stronger Gemini 1.5 Pro model (better on coding & complex prompts)

– A significantly improved Gemini 1.5 Flash model

Try them on https://t.co/fBrh6UGKz7, details in