ChatGPT Advanced Voice Mode speaks up

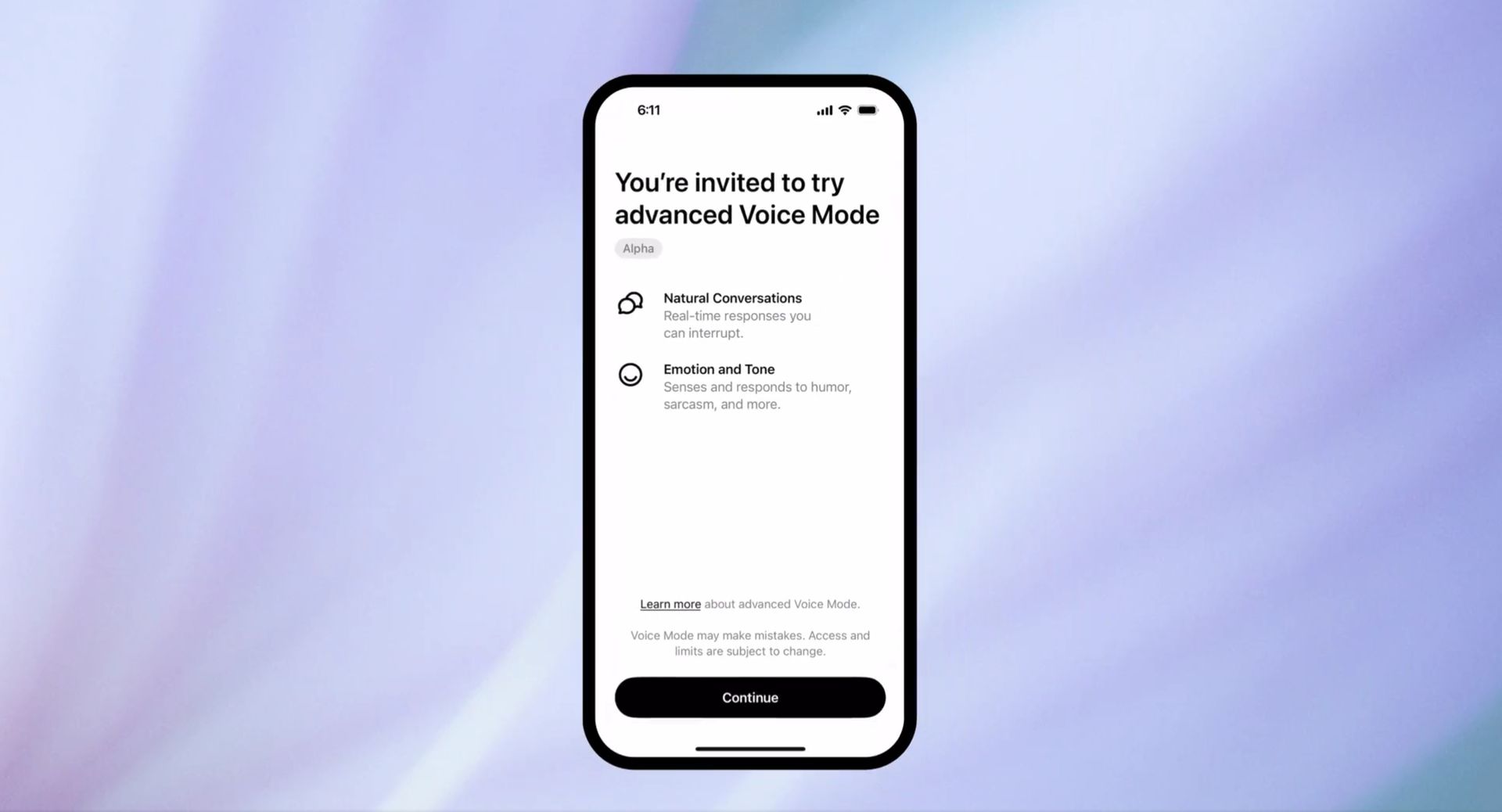

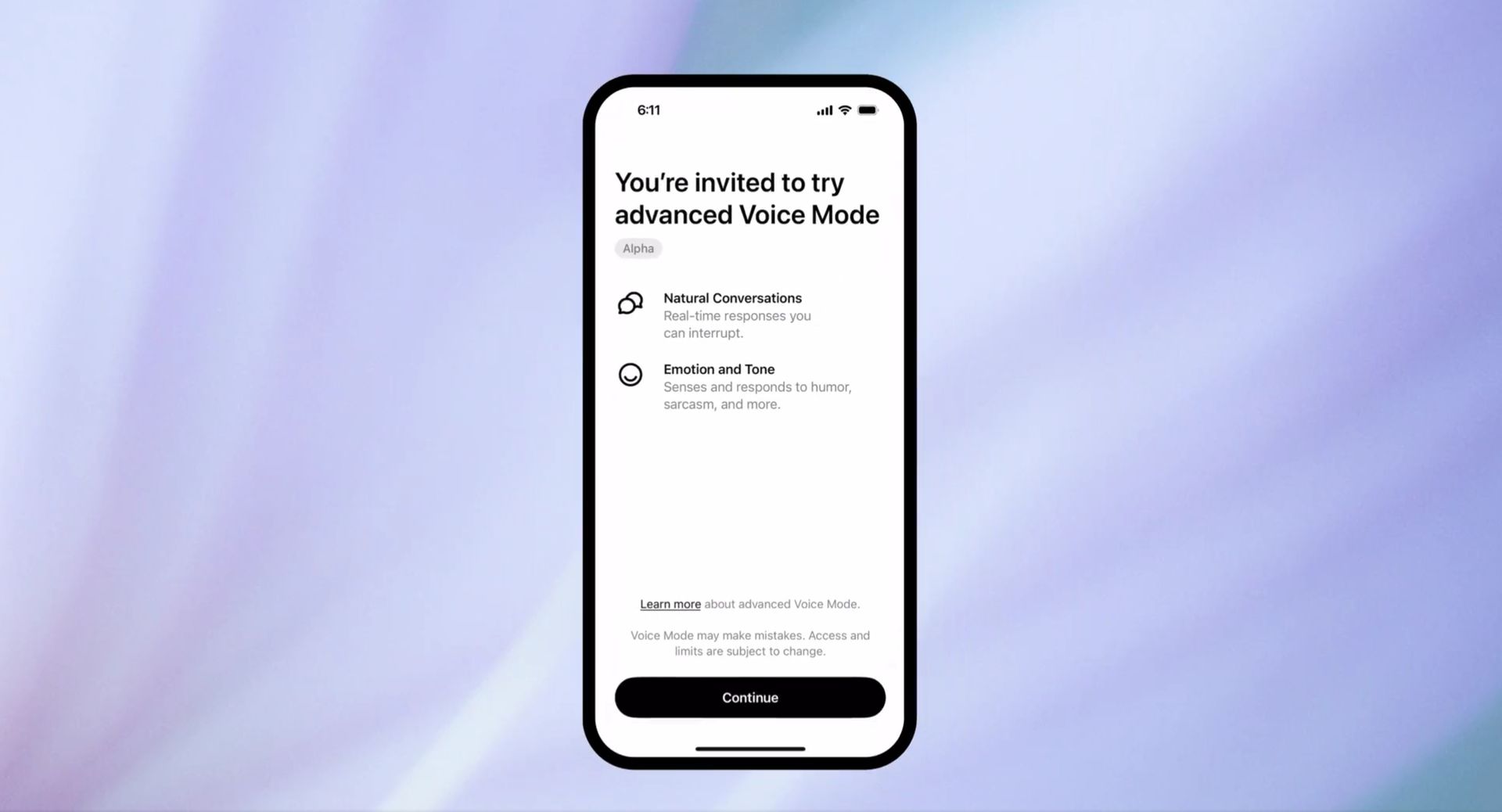

ChatGPT Advanced Voice Mode has arrived, bringing a new dimension to conversational AI.

OpenAI’s latest feature, which follows the announcement of GPT-4o and its voice capabilities, lets users talk to the chatbot and hear it answer aloud, creating a more natural and engaging experience.

Let’s explore the ins and outs of OpenAI’s ChatGPT Advanced Voice Mode, how it works, and what users can expect from this innovative technology.

We’re starting to roll out advanced Voice Mode to a small group of ChatGPT Plus users. Advanced Voice Mode offers more natural, real-time conversations, allows you to interrupt anytime, and senses and responds to your emotions. pic.twitter.com/64O94EhhXK

— OpenAI (@OpenAI) July 30, 2024

What is ChatGPT Advanced Voice Mode?

ChatGPT Advanced Voice Mode transforms the way users interact with the AI assistant. Instead of typing queries, users can now speak directly to ChatGPT and receive audio responses.

OpenAI began rolling out ChatGPT Advanced Voice Mode on Tuesday, July 30, 2024, initially offering it to a select group of ChatGPT Plus subscribers.

The company plans to expand access to all ChatGPT Plus users in the fall of 2024. This gradual rollout allows OpenAI to monitor usage closely and make any necessary adjustments before a wider release.

How does ChatGPT Advanced Voice Mode work its magic?

ChatGPT Advanced Voice Mode uses a new system to process audio input and generate spoken responses. Unlike the previous voice feature, which relied on separate models for speech-to-text, text processing, and text-to-speech conversion, the new advanced mode integrates these functions into a single, multimodal model called GPT-4o.

This integrated approach results in faster, more natural conversations with lower latency. Because one model handles the whole exchange, it can take in spoken audio, interpret it in context, and reply in speech without handing off between separate systems.

Some early impressions of the ChatGPT Advanced Voice Mode:

It’s very fast, there’s virtually no latency from when you stop speaking to when it responds.

When you ask it to make noises it always has the voice “perform” the noises (with funny results).

It can do accents, but when… pic.twitter.com/vOA8qmqX06

— Cristiano Giardina (@CrisGiardina) July 31, 2024

Additionally, ChatGPT Advanced Voice Mode can detect emotional nuances in the user’s voice, such as sadness or excitement, allowing for more empathetic interactions.

OpenAI has implemented several safety measures to address potential concerns. Before release, the company tested the feature with more than 100 external evaluators who collectively speak 45 languages, and this diverse group helped identify and fix problems ahead of the public rollout.

How to talk with ChatGPT

To use ChatGPT Advanced Voice Mode, eligible users will receive an alert in the ChatGPT app, followed by an email with detailed instructions. Once activated, users can start voice conversations with ChatGPT through their device’s microphone.

The system offers four preset voices for ChatGPT’s responses:

- Juniper

- Breeze

- Cove

- Ember

These voices were created in collaboration with paid voice actors to ensure high-quality and natural-sounding audio output. It’s important to note that ChatGPT cannot impersonate specific individuals or public figures, as OpenAI has implemented measures to prevent such misuse.

And no, the Sky voice was not added to ChatGPT Advanced Voice Mode. OpenAI paused Sky in May 2024 after its resemblance to actress Scarlett Johansson’s voice drew criticism.

Users can engage in various types of conversations, from asking questions and seeking advice to brainstorming ideas or practicing language skills. The voice interaction adds a new layer of convenience and accessibility, especially for those who prefer speaking over typing.

The Sky voice was left outside of the new ChatGPT Advanced Voice Mode (Image credit)

What about the voices of the future?

As ChatGPT Advanced Voice Mode continues to develop, it’s likely to have a significant impact on how people interact with AI assistants. The technology opens up new possibilities for accessibility, education, and productivity applications.

However, it’s worth noting that some features demonstrated in earlier previews, such as video and screen-sharing capabilities, are not included in the current release. OpenAI has stated that these additional functionalities will be launched at a later date, giving users something to look forward to in future updates.

The introduction of ChatGPT Advanced Voice Mode raises questions about the future of AI regulation. OpenAI has recently endorsed several U.S. Senate bills related to AI development and education. These endorsements suggest that the company is taking an active role in shaping the regulatory landscape for AI technologies.

One of the endorsed bills, the Future of AI Innovation Act, would formally authorize the United States AI Safety Institute as a federal body responsible for setting standards and guidelines for AI models. The endorsement signals OpenAI’s willingness to work with government agencies on the safe and responsible development of AI technologies.

As ChatGPT Advanced Voice Mode becomes more widely available, it will be interesting to see how users adapt to this new form of interaction and what creative applications emerge. The technology has the potential to change the way we communicate with AI assistants, making them more accessible and intuitive for a broader range of users.

Featured image credit: OpenAI/X