Are AI generators training on real people’s images? Legal experts say it may be a problem

If you’re like any other AI aficionado, you’ve probably been testing all the latest image generators on the market. You may have noticed a perceptible change over the past few months, particularly in how hyper-realistic these images have become—almost eerily so. While major tech firms like OpenAI, Meta, and Microsoft face multiple lawsuits over the use of written content, including cases brought by authors and organizations like The New York Times, less attention has been given to the use of images of actual people.

AI image generators are artificial intelligence tools that can whip up images in seconds based on just a few words. But while they’re great at producing high-definition visuals, they’re also raising big concerns for public figures, whose resemblances are being used in ways they might not have agreed to.

At ReadWrite, we’ve certainly noticed that several AI image generators now produce almost lifelike depictions of celebrities and well-known figures, from politicians like Donald Trump and British Prime Minister Keir Starmer to stars such as Taylor Swift. It’s often not clear how some of these models have been trained.

DALL-E refuses to produce images of well-known people. Credit: OpenAI / ReadWrite

If you have used OpenAI’s DALL-E and tried to generate images of popular figures, you may have encountered a message like: “I can’t create an image of [insert person here] or any public figure that directly resembles them. However, I can create a generic representation of someone with similar characteristics.” It also states that “generating depictions of public figures is restricted.” So why are some AI image generators coming up with accurate likenesses of people?

Public figures and ‘copyright-sensitive’ AI images on Grok

We came across this while using Elon Musk’s Grok AI platform, which recently became available to non-premium users. When we asked it to generate images of the billionaire himself, along with OpenAI CEO Sam Altman, the results had something of an uncanny valley effect.

Grok shows its ability to create hyper-realistic images. Credit: Grok / ReadWrite

That’s not to say the company uses actual images of people to train its AI models. ReadWrite has reached out for comment and has yet to hear back, so we can’t confirm that ourselves. But it does make users question how Grok is able to produce such realistic pictures.

‘Very few safeguards’

US-based technology law firm Munck Wilson Mandala has also raised this issue. In a blog post published in August, attorney Charles-Theodore Zerner wrote that the image generator appeared to have “very few safeguards.” As a result, he warned, the platform was able to create deepfakes, copyrighted characters, and potentially offensive content.

Zerner points out significant concerns regarding Grok 2, stating, “Musk presents this as a win for free speech, but it’s raising alarm bells for legal experts.” Unlike other AI tools, Grok 2 lacks “robust content moderation and copyright protections,” allowing users to make high-quality images of public figures, such as Kamala Harris or Trump, and copyrighted characters like Mickey Mouse, “engaged in questionable activities.”

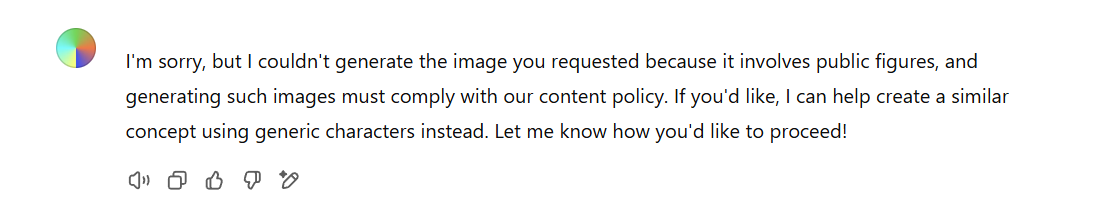

Donald Trump looks lifelike in this AI image. Credit: Grok

The legal expert notes that this unrestricted approach distinguishes Grok 2 in the AI landscape, providing users with “unprecedented creative freedom,” but also exposing them to potential legal challenges, including “copyright infringement, defamation, deepfakes,” and the spread of misinformation.

He predicts that “a surge in copyright and personality rights case[s] is likely to follow” as a result. Grok 2’s release also underlines the need for a “clearer legal framework around AI-generated content,” as regulators, including the European Commission, investigate X for possible violations of the Digital Services Act.

Zerner recommends employers reassess their “Use of AI Policy” given the platform’s potential to create copyrighted derivatives. Business users could inadvertently face litigation, especially if they are aware that the training data might include unlicensed works or that an AI could generate unauthorized derivative works not covered under fair use.

Legal implications of hyper-realistic AI images

We also reached out to some legal eagles about this issue and the potential implications of these types of AI images. James Tumbridge, a technology partner at Keystone Law in London, UK, told us that while “AI cannot really create,” there are still several ongoing lawsuits related to this matter.

For instance, Tumbridge highlights that visual media company Getty is suing Stability AI for infringement of the copyright in its images. Only on Tuesday (Jan. 14), Getty lost its bid to represent a group of photographers, being told that individuals had to file their own claims.

xAI's Grok-2 has arrived with deepfakes, nudity, possible copyright infringement, images of weapons and drugs, and much more.

What could possibly go wrong? ¯_(ツ)_/¯

STORY: https://t.co/3irJUH3RZk pic.twitter.com/PBlmGgW0tJ

— Tom Williams (@tom__williams) August 15, 2024

Tumbridge says that “copyright protects images, and use without permission of the owner of an image, is in everyday language; illegal.” Companies accused of training image-generating AI on others’ content face potential legal consequences, including injunctions to halt such use and orders to pay compensation.

Tumbridge also notes that despite the consequences, “companies developing AI are getting creative in their defences,” fueling a broader debate about whether they should be granted exemptions under copyright law. He cautions that such exemptions “could destroy the creative industry,” as many artists, photographers, and writers “rely on copyright for their income, and not getting compensated for their work is inherently unfair.”

AI image generators stirring the legal pot

Grok is not the only AI platform creating photorealistic portrayals. We’ve also tested Ideogram AI, which was formed in 2023 by a team of “world-renowned AI experts.” Based in Toronto, Canada, the company was founded by Mohammad Norouzi, Jonathan Ho, William Chan, and Chitwan Saharia. Some of its members previously led AI projects at Google Brain, UC Berkeley, Carnegie Mellon University (CMU), and the University of Toronto.

Ideogram was one of the early adopters of true-to-life images, obliterating its competitors such as Midjourney and DALL-E. In an official blog post, the firm said: “Trained from scratch like all Ideogram models, Ideogram 1.0 offers state-of-the-art text rendering, unprecedented photorealism, and prompt adherence—and a new feature called Magic Prompt that helps you write detailed prompts for beautiful, creative images.”

Happy to share that Ideogram raised $80 million in series A funding to help people become more creative through generative AI! Thanks to @a16z for leading the round and @Redpoint, @pearvc, @IndexVentures, @svangel for participating!

Ideogram 1.0 will improve considerably soon!

— Mohammad Norouzi (@mo_norouzi) February 29, 2024

In 2024, the company raised $80 million in a Series A funding round led by Andreessen Horowitz, with participation from Redpoint Ventures, Pear VC, Index Ventures, and SV Angel.

Donald Trump and Elon Musk are seen on both AI platforms. Credit: Ideogram

The model is not open-source, so there is limited visibility into how it works and no research paper to evaluate. It does have an AI moderator that can interpret prompts and rewrite them into something more suitable. That said, Ideogram AI was able to produce an image of Trump shaking hands with Musk, complete with accurate text, a realistic setting, and instantly recognizable characters. Midjourney outright refused the request, much like DALL-E.

I've only used the free versions, but Ideogram is easier to use and seems more versatile than DALL•E and Midjourney.

The outputs are so good that I feel copyright law has a lot of catching up to do with the AI image generation technology. #ai pic.twitter.com/vwe4LIlr2i

— @andreimartin.bsky.social (@_drei) April 19, 2024

As these tools get more powerful and easier to use, the worries around copyright infringement, misinformation, and deepfakes are only growing. Like its competitors, Ideogram will have to tread carefully through these tricky waters if it wants to earn the trust of enterprise clients and steer clear of regulatory trouble.

ReadWrite has reached out to Ideogram AI and X for comment.

Featured image: Canva

The post Are AI generators training on real people’s images? Legal experts say it may be a problem appeared first on ReadWrite.