OpenAI adds threat filter to its smartest models

OpenAI has introduced a new monitoring system for its latest AI models, o3 and o4-mini, to detect and prevent prompts related to biological and chemical threats, according to the company’s safety report. The system, described as a “safety-focused reasoning monitor,” is designed to identify potentially hazardous requests and instruct the models to refuse to provide advice.

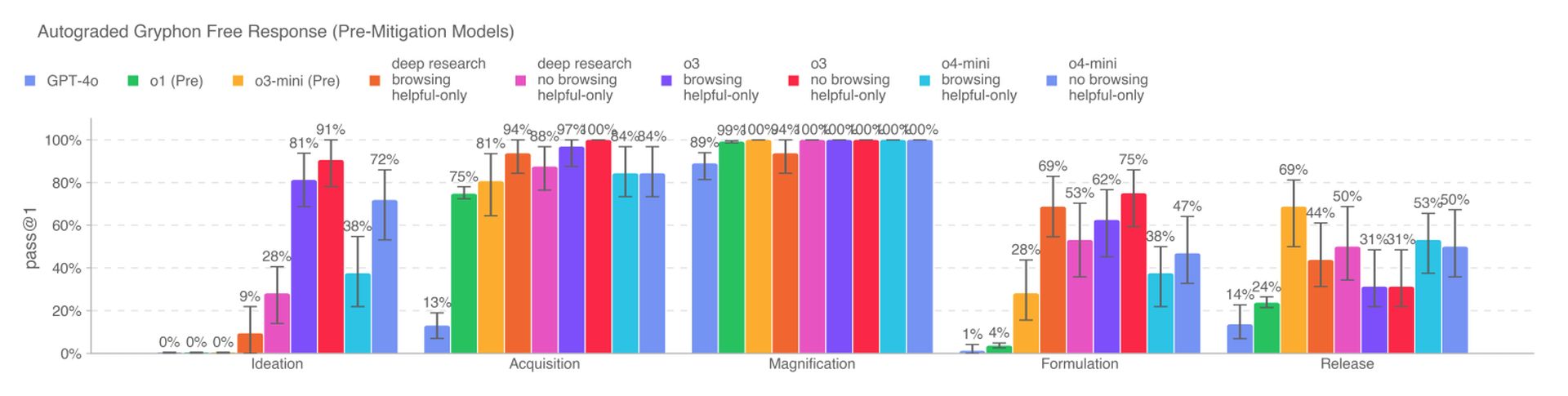

The new AI models represent a significant capability increase over OpenAI’s previous models and pose new risks if misused by malicious actors. In particular, o3 has shown increased proficiency in answering questions related to creating certain biological threats, according to OpenAI’s internal benchmarks. To mitigate these risks, the monitoring system was custom-trained to reason about OpenAI’s content policies and runs on top of o3 and o4-mini.

Image: OpenAI

To develop the monitoring system, OpenAI’s red teamers spent around 1,000 hours flagging “unsafe” biorisk-related conversations from o3 and o4-mini. In a simulated test of the monitor’s blocking logic, the models declined to respond to risky prompts 98.7% of the time. However, OpenAI acknowledges that this test did not account for users who might try new prompts after being blocked, which is why the company says it will continue to rely on human monitoring.
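The caveat about repeated attempts is worth a quick back-of-the-envelope check. The sketch below is purely illustrative: it assumes each new prompt is blocked independently at the same 98.7% rate, which is a simplification and not something OpenAI's report claims, and the function name `prob_any_bypass` is hypothetical.

```python
def prob_any_bypass(block_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` prompts is not blocked.

    Assumes every attempt is blocked independently with probability
    `block_rate`. Real users adapt their prompts and real monitors are
    not independent across attempts, so treat this as a rough guide only.
    """
    if not 0.0 <= block_rate <= 1.0:
        raise ValueError("block_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # P(all attempts blocked) = block_rate ** attempts
    return 1.0 - block_rate ** attempts


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(f"{n:>3} attempts: {prob_any_bypass(0.987, n):.1%} chance of a bypass")
```

Under that simplified assumption, a per-prompt refusal rate that looks very high still leaves a meaningful chance of a bypass once a persistent user retries many times, which is consistent with OpenAI's stated reason for keeping humans in the loop.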

According to OpenAI, o3 and o4-mini do not cross the “high risk” threshold for biorisks. Still, early versions of these models proved more helpful than o1 and GPT-4 in answering questions related to developing biological weapons. The company is actively tracking the potential risks associated with its models and is increasingly relying on automated systems to mitigate them.

OpenAI is using a similar reasoning monitor to prevent GPT-4o’s native image generator from creating child sexual abuse material (CSAM). However, some researchers have raised concerns that OpenAI is not prioritizing safety as much as it should, citing limited time to test o3 on a benchmark for deceptive behavior and the lack of a safety report for GPT-4.1.