Google turns Maps into a playground for AI agents and builders

Google is introducing a suite of new AI-powered features for Google Maps, primarily designed to help developers build interactive projects using Maps data and code. The new tools are powered by Google’s Gemini models.

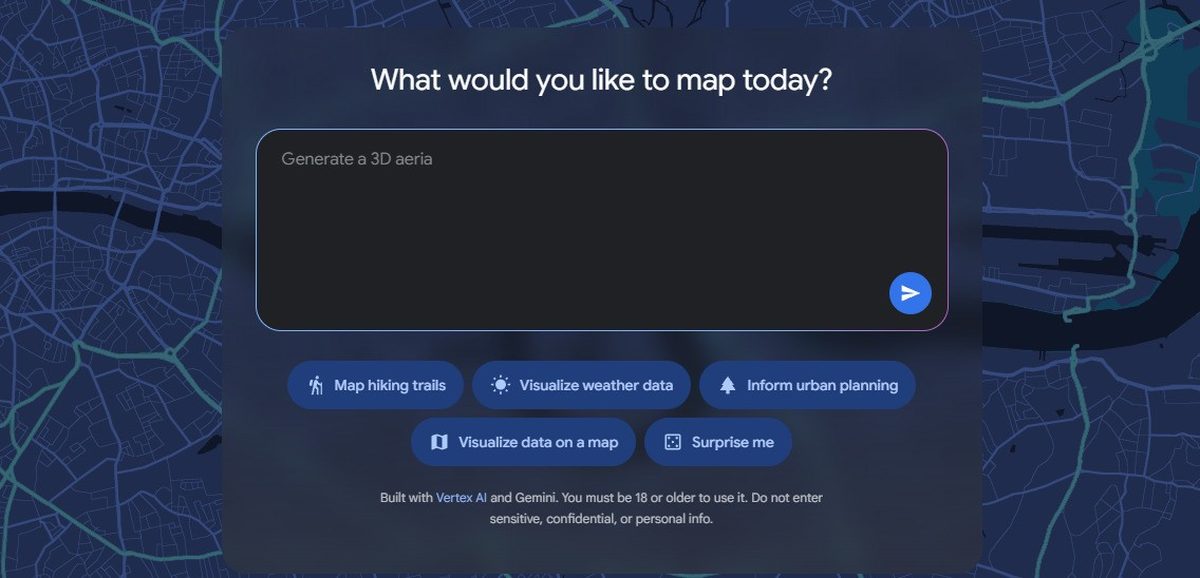

The new developer features include a “builder agent,” a tool that lets developers create map-based prototypes from natural-language prompts. For example, a developer can type “create a Street View tour of a city” or “list pet-friendly hotels in the city,” and the agent will generate the corresponding code, which can then be exported or modified in Firebase Studio. A “styling agent” is also included to help developers customize a map’s theme and color scheme to match their branding.

Google is also introducing “Grounding Lite,” a new feature that lets developers ground their own AI models in Google Maps data. It uses the Model Context Protocol (MCP), a standard that lets AI assistants connect to external data sources. This enables AI assistants to accurately answer location-based questions such as “How far is the nearest grocery store?” To present answers to such queries visually, Google is shipping “Contextual View,” a low-code component that can display information as a list, a map, or a 3D display.

Additionally, Google is releasing a code assistant toolkit called the “MCP server.” This tool connects an AI assistant directly to Google Maps’ technical documentation, so developers can get answers and coding help when working with the Maps API.

These new developer tools follow other recent AI integrations for Maps, including the rollout of hands-free Gemini navigation for consumers and new incident alerts for users in India.