Google’s new AI could predict disasters before they hit

Google is using generative AI and several foundation models to introduce Geospatial Reasoning, a research initiative aimed at speeding up geospatial problem-solving. The effort combines large language models such as Gemini with remote sensing foundation models to improve data analysis across a range of sectors.

For years, Google has compiled geospatial data (information tied to specific geographic locations) to improve its products. This data is crucial for addressing enterprise challenges in areas such as public health, urban development, and climate resilience.

The new remote sensing foundation models are built on architectures such as masked autoencoders, SigLIP, MaMMUT, and OWL-ViT, and are trained on high-resolution satellite and aerial imagery paired with text descriptions and bounding-box annotations. The models produce detailed embeddings for images and objects, and can be adapted to tasks such as mapping infrastructure, assessing disaster damage, and locating specific features.

These models support natural language interfaces, letting users perform tasks such as finding images of specific structures or identifying impassable roads. Google reports that the models achieve state-of-the-art performance on a range of remote sensing benchmarks.
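Natural language image search of this kind typically works by placing text queries and image tiles in a shared embedding space (as contrastive models like SigLIP do) and ranking tiles by similarity to the query. The sketch below illustrates only that ranking step, assuming embeddings have already been computed; the tile names and the tiny 3-dimensional vectors are hypothetical stand-ins, not output from Google's models.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def search(query_emb: list[float], image_embs: dict[str, list[float]], top_k: int = 2):
    """Rank image tiles by cosine similarity to a text-query embedding."""
    scored = [(tile_id, cosine(query_emb, emb)) for tile_id, emb in image_embs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; real model embeddings have hundreds of dimensions.
image_embeddings = {
    "tile_a": [0.9, 0.1, 0.0],
    "tile_b": [0.1, 0.9, 0.1],
    "tile_c": [0.7, 0.3, 0.1],
}
# Hypothetical stand-in for the text embedding of a query like "flooded road".
query = [1.0, 0.0, 0.0]
results = search(query, image_embeddings)
```

Here `tile_a` and `tile_c` rank highest because their vectors point in nearly the same direction as the query vector.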

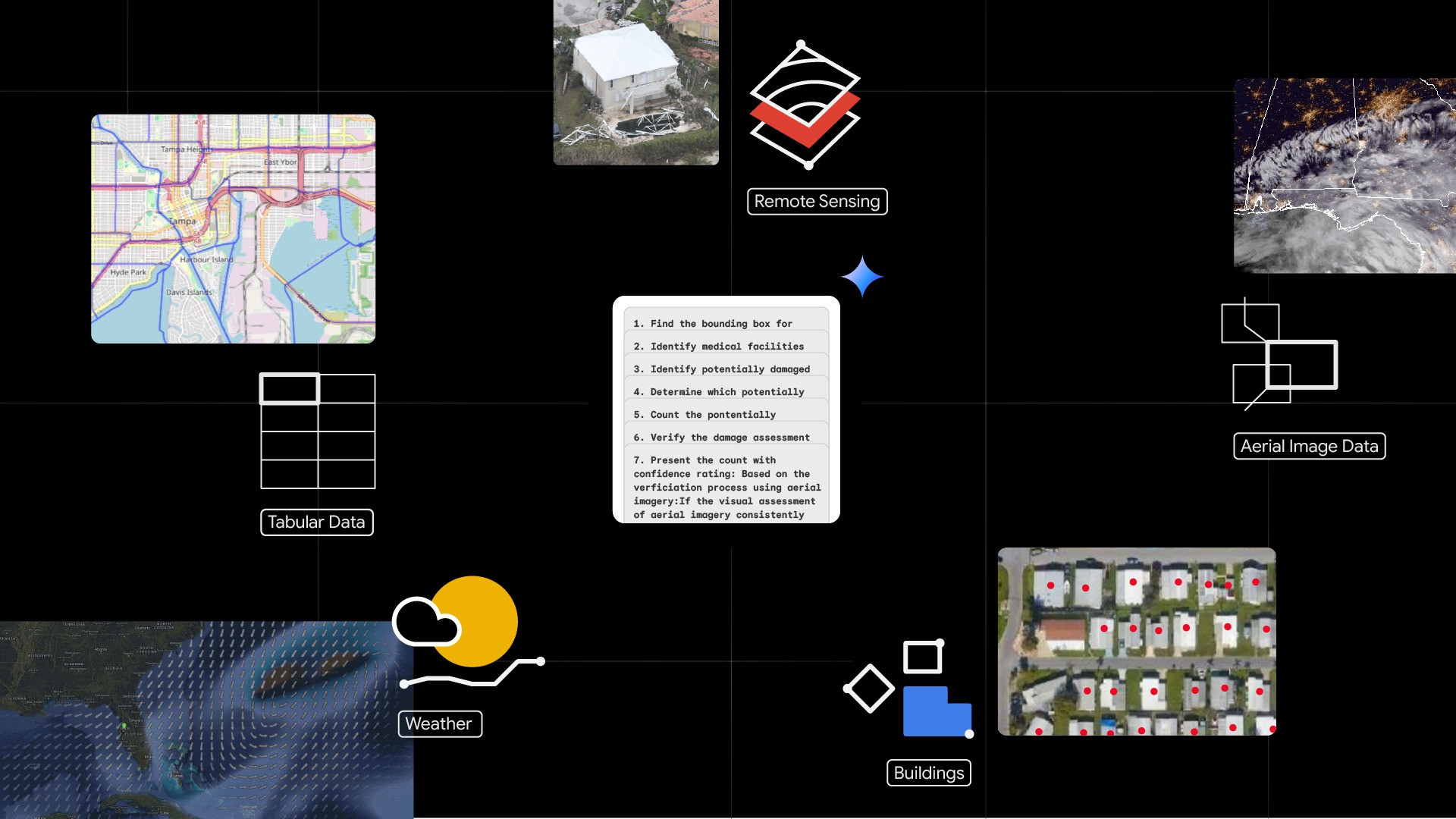

Geospatial Reasoning aims to integrate Google’s advanced foundation models with user-specific models and datasets, building on the existing pilot of Gemini capabilities in Google Earth. This framework allows developers to construct custom workflows on Google Cloud Platform to manage complex geospatial queries using Gemini, which orchestrates analysis across various data sources.

The demonstration application shows how a crisis manager can use Geospatial Reasoning after a hurricane by:

- Visualizing pre-disaster context: Using openly available satellite imagery from Earth Engine.

- Visualizing post-disaster situation: Importing high-resolution aerial imagery.

- Identifying damaged areas: Using remote sensing foundation models to analyze aerial images.

- Predicting further risk: Using WeatherNext AI weather forecasts.

- Asking Gemini questions: Estimating damage fractions and the value of damaged property, and getting suggestions for how to prioritize relief.
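The article does not say exactly how damage fractions or relief priorities are computed. As a rough illustration of the arithmetic involved, the sketch below assumes each building has a model-assigned damage probability and an estimated value, counts a building as damaged above a threshold, and ranks areas by damage fraction weighted by a social vulnerability score. The `Building` fields, the 0.5 threshold, the weighting, and all numbers are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Building:
    area: str           # neighborhood or tract identifier (hypothetical)
    damage_prob: float  # damage probability from an imagery model, in [0, 1]
    value_usd: float    # estimated property value


def summarize(buildings, threshold=0.5):
    """Per-area fraction of damaged buildings and total damaged property value."""
    totals = defaultdict(lambda: {"n": 0, "damaged": 0, "damaged_value": 0.0})
    for b in buildings:
        t = totals[b.area]
        t["n"] += 1
        if b.damage_prob >= threshold:
            t["damaged"] += 1
            t["damaged_value"] += b.value_usd
    return {
        area: {"damage_fraction": t["damaged"] / t["n"], "damaged_value": t["damaged_value"]}
        for area, t in totals.items()
    }


def prioritize(summary, vulnerability):
    """Order areas by damage fraction weighted by a vulnerability score (an assumed heuristic)."""
    return sorted(
        summary,
        key=lambda area: summary[area]["damage_fraction"] * vulnerability.get(area, 0.0),
        reverse=True,
    )


buildings = [
    Building("north", 0.8, 300_000),
    Building("north", 0.2, 250_000),
    Building("south", 0.9, 150_000),
    Building("south", 0.7, 120_000),
]
summary = summarize(buildings)
order = prioritize(summary, {"north": 0.3, "south": 0.8})
```

With these toy inputs, half of the buildings in "north" and all of those in "south" count as damaged, so "south" ranks first for relief.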

The demonstration application includes:

- A packaged Python front-end application that combines mapping and graphing components with a chat window.

- An agentic back-end that implements a LangGraph agent deployed with Vertex AI Agent Engine.

- LLM-accessible tools for accessing Earth Engine, BigQuery, Google Maps Platform, and Google Cloud Storage, for performing geospatial operations, and for calling remote sensing foundation model inference endpoints deployed on Vertex AI.
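The demonstration's agent code is not included in the announcement. The minimal sketch below, written in plain Python rather than with LangGraph, shows the general pattern of an agent calling tools from a registry: each step names a tool and its arguments, and the results are collected as observations. The tool names and return values are hypothetical, and the hard-coded `plan` stands in for the decisions an LLM such as Gemini would make.

```python
from typing import Callable

# Registry mapping tool names to Python callables.
TOOLS: dict[str, Callable[..., object]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("count_damaged_buildings")
def count_damaged_buildings(area: str) -> int:
    # Hypothetical stand-in for a call to a model inference endpoint.
    return {"north": 1, "south": 2}.get(area, 0)


@tool("housing_value")
def housing_value(area: str) -> float:
    # Hypothetical stand-in for a housing-price query against a data warehouse.
    return {"north": 300_000.0, "south": 135_000.0}.get(area, 0.0)


def run_plan(plan):
    """Execute (tool_name, kwargs) steps and collect (tool_name, result) observations."""
    observations = []
    for name, kwargs in plan:
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")
        observations.append((name, TOOLS[name](**kwargs)))
    return observations


# In a real agent, the LLM would choose these steps from the user's question.
plan = [
    ("count_damaged_buildings", {"area": "south"}),
    ("housing_value", {"area": "south"}),
]
obs = run_plan(plan)
```

In a full agent loop, the observations would be passed back to the LLM, which could then request further tool calls or compose an answer.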

The application uses high-resolution aerial imagery from the Civil Air Patrol, pre-processed with AI from Bellwether (X's moonshot for climate adaptation), along with Google Research's Open Buildings and SKAI models. It also incorporates social vulnerability indices, housing price data, and Google WeatherNext insights.

WPP's Choreograph will integrate Google's Population Dynamics Foundation Model (PDFM) with its media performance data to enhance AI-driven audience intelligence. Airbus, Maxar, and Planet Labs will be among the first to test the remote sensing foundation models.

Specifically:

- Airbus: Plans to use Google’s remote sensing foundation models to enable users to extract insights from billions of satellite images.

- Maxar: Intends to utilize the models to help customers interact with its “living globe” and extract mission-critical answers faster.

- Planet Labs: Will use the remote sensing foundation models to help its customers reach insights faster and more easily.