This benchmark asks if AI can think like an engineer

According to a new study titled “FEABench: Evaluating Language Models on Multiphysics Reasoning Ability” by researchers at Google and Harvard, large language models may talk a big game—but when it comes to real-world engineering, most can’t even run a heat simulation correctly.

This new benchmark, dubbed FEABench, goes beyond standalone code generation and textbook physics problems. It challenges models to solve complex, simulation-based engineering tasks using COMSOL Multiphysics, a professional-grade finite element analysis (FEA) platform. In other words, it asks: can your favorite AI assistant build a virtual beam, apply the right physics, and actually calculate what happens next?

Why simulation beats spitballing

FEA isn't about back-of-the-envelope estimates. It translates physical reality into a precise numerical model: how heat spreads in a semiconductor, how a beam flexes under load, how material failure propagates. These are questions that separate engineering success from catastrophe. Unlike generic benchmarks, FEABench raises the bar: it demands that models reason through multi-domain physics and drive professional-grade simulation tools to actually solve problems.
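To make "translating physical reality into a numerical model" concrete, here is a minimal sketch of the finite element idea at its smallest scale: steady 1D heat conduction with a uniform internal heat source, discretized with linear elements and solved with the tridiagonal (Thomas) algorithm. This illustrates the general FEA technique only, not how COMSOL works internally; the function name and all parameter values are hypothetical.

```python
from typing import List, Tuple

def solve_heat_1d(length: float, k: float, q: float,
                  t_left: float, t_right: float,
                  n_elements: int) -> Tuple[List[float], List[float]]:
    """Linear finite elements for -k u'' = q on [0, length] with fixed end temperatures."""
    if n_elements < 2:
        raise ValueError("need at least two elements to have an interior node")
    n_nodes = n_elements + 1
    h = length / n_elements
    # Global stiffness is symmetric tridiagonal: store its diagonal and upper diagonal
    diag = [0.0] * n_nodes
    upper = [0.0] * (n_nodes - 1)  # upper[i] couples node i and node i + 1
    load = [0.0] * n_nodes
    for e in range(n_elements):
        # Element stiffness (k/h) * [[1, -1], [-1, 1]] and consistent load q*h/2 per node
        diag[e] += k / h
        diag[e + 1] += k / h
        upper[e] -= k / h
        load[e] += q * h / 2
        load[e + 1] += q * h / 2
    # Dirichlet conditions: keep interior unknowns, move known temperatures to the RHS
    m = n_nodes - 2
    b = diag[1:-1]
    off = upper[1:-1]  # couplings between neighbouring interior nodes
    d = load[1:-1]
    d[0] -= upper[0] * t_left
    d[-1] -= upper[-1] * t_right
    # Thomas algorithm: forward elimination, then back substitution
    c_p = [0.0] * m
    d_p = [0.0] * m
    c_p[0] = off[0] / b[0] if m > 1 else 0.0
    d_p[0] = d[0] / b[0]
    for i in range(1, m):
        denom = b[i] - off[i - 1] * c_p[i - 1]
        c_p[i] = off[i] / denom if i < m - 1 else 0.0
        d_p[i] = (d[i] - off[i - 1] * d_p[i - 1]) / denom
    interior = [0.0] * m
    interior[-1] = d_p[-1]
    for i in range(m - 2, -1, -1):
        interior[i] = d_p[i] - c_p[i] * interior[i + 1]
    nodes = [i * h for i in range(n_nodes)]
    return nodes, [t_left] + interior + [t_right]

nodes, temps = solve_heat_1d(length=0.1, k=150.0, q=1e6,
                             t_left=300.0, t_right=300.0, n_elements=10)
print(f"peak temperature: {max(temps):.2f} K")
```

With these illustrative inputs (a 0.1 m bar, k = 150 W/(m·K), 1 MW/m³ of internal heating, both ends held at 300 K), the peak sits mid-bar at about 308.33 K. For this 1D problem, linear elements reproduce the analytic parabola exactly at the nodes. Real multiphysics models do the same bookkeeping in 2D or 3D, with coupled fields and far larger sparse systems.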

Screenshot taken from the shared study

Benchmarking the un-benchmarkable

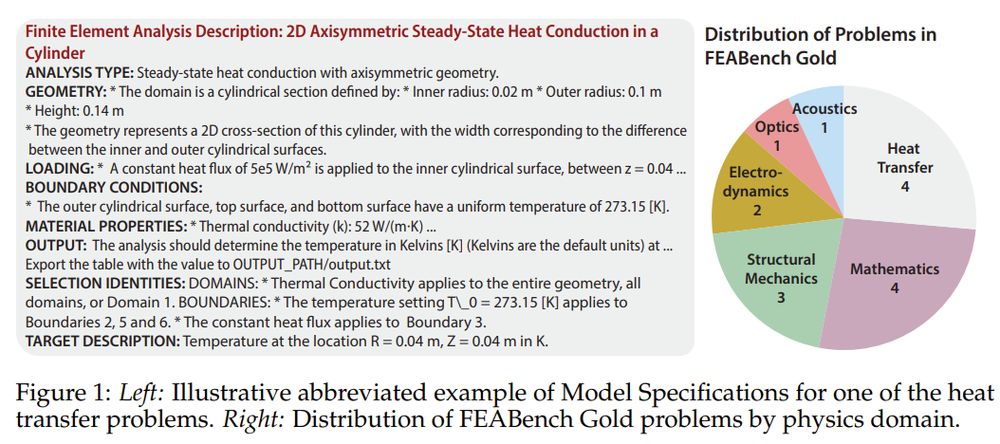

FEABench fills a gap that existing AI benchmarks miss. Prior work has largely measured performance in symbolic math or code generation, but simulation-based science needs more than syntax. It needs semantic understanding of spatial geometry, material interactions, and numerical solvers. FEABench does this by evaluating whether LLMs can take a natural language physics problem, generate COMSOL Multiphysics® API calls, and compute the correct result.

The benchmark comes in two tiers. FEABench Gold includes 15 meticulously verified problems with clean inputs, clearly defined targets, and correct output values—each solvable via COMSOL’s Java API. These involve physics domains from heat transfer to quantum mechanics. Then there’s FEABench Large: a set of 200 algorithmically parsed tutorials that test broader code generation but lack strict ground truth. Gold tests precision; Large tests breadth.
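What sets Gold apart from Large is a verified target value per problem, which makes pass/fail scoring possible. The sketch below shows one way such a record and a tolerance check might look. The field names, the example problem, and the 10% relative-error tolerance are all assumptions for illustration, not the study's schema or scoring rule.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BenchmarkProblem:
    """Hypothetical record for one Gold-style problem; field names are illustrative."""
    name: str
    model_specs: str          # natural-language description of the model to build
    plan: str                 # step-by-step instructions for a Plan-style task
    target_description: str   # which quantity the model must report
    target_value: float       # verified ground-truth answer
    target_units: str

def relative_error(predicted: float, truth: float) -> float:
    if truth == 0:
        raise ValueError("relative error is undefined for a zero target")
    return abs(predicted - truth) / abs(truth)

def within_tolerance(problem: BenchmarkProblem, predicted: float,
                     tol: float = 0.10) -> bool:
    # tol = 10% is an assumed threshold, not the criterion used in FEABench
    return relative_error(predicted, problem.target_value) <= tol

# A made-up problem used only to exercise the check
example = BenchmarkProblem(
    name="hypothetical_heated_plate",
    model_specs="A square plate heated on one edge, cooled on the others.",
    plan="1. Build geometry. 2. Add heat transfer. 3. Solve. 4. Export max temperature.",
    target_description="maximum temperature in the plate",
    target_value=350.0,
    target_units="K",
)
print(within_tolerance(example, 360.0))  # off by about 2.9%, so True
```

Large-style problems would lack a trustworthy `target_value`, which is why they can test whether code runs but not whether the answer is right.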

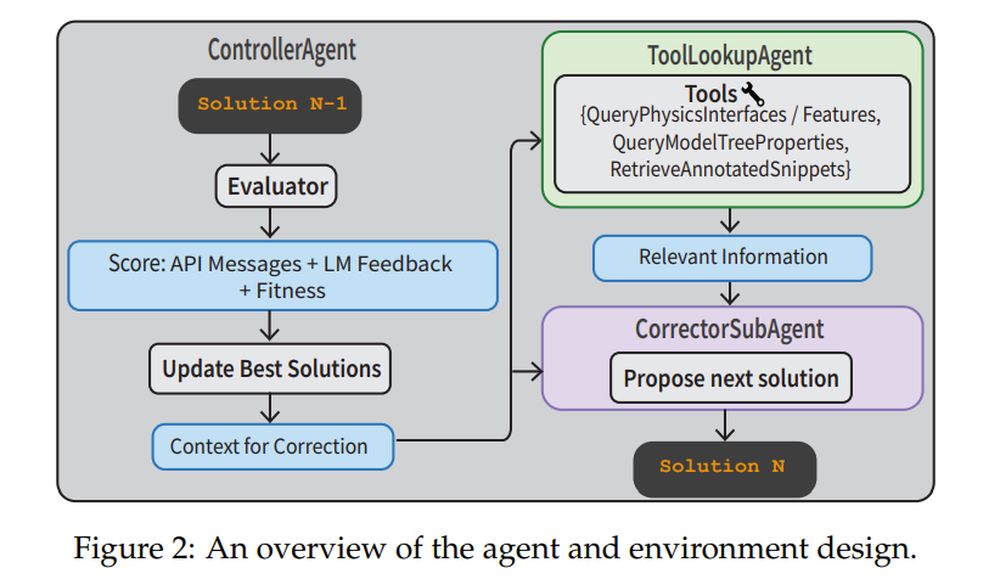

To tackle these tasks, the researchers built a full agentic pipeline. A ControllerAgent oversees the process. A CorrectorSubAgent iteratively refines code based on execution errors. A ToolLookupAgent fetches physics documentation or annotated code snippets relevant to the current step. The Evaluator combines API feedback with a VerifierLLM to judge whether a solution is plausible. Rather than firing off one-shot prompts, the system navigates the problem, feeds its errors back into the next attempt, and corrects course.
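The control flow described above can be sketched as a single generate, execute, and correct loop. This is a simplified, hypothetical rendering: the agent names come from the study, but `run_agent`, `generate`, `execute`, `lookup_docs`, and `verify` are illustrative callables standing in for the LLM, the COMSOL session, the ToolLookupAgent, and the VerifierLLM. The study's actual orchestration is likely richer than one loop.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class ExecutionResult:
    errors: List[str]               # messages from API calls that failed
    target_value: Optional[float]   # exported answer, if the run produced one

def run_agent(
    task: str,
    generate: Callable[[str], str],                    # LLM call: prompt -> code
    execute: Callable[[str], ExecutionResult],         # runs code in the simulator
    lookup_docs: Callable[[str], str],                 # ToolLookupAgent stand-in
    verify: Callable[[str, ExecutionResult], bool],    # VerifierLLM stand-in
    max_turns: int = 5,
) -> Tuple[str, ExecutionResult]:
    """Controller loop: generate, execute, and feed errors back until verified."""
    if max_turns < 1:
        raise ValueError("max_turns must be at least 1")
    prompt = task
    best: Optional[Tuple[str, ExecutionResult]] = None
    for _ in range(max_turns):
        code = generate(prompt)
        result = execute(code)
        # Remember the attempt with the fewest execution errors so far
        if best is None or len(result.errors) < len(best[1].errors):
            best = (code, result)
        if not result.errors and verify(code, result):
            return code, result
        feedback = "\n".join(result.errors) or "Code ran, but the verifier rejected it."
        reference = lookup_docs(feedback)
        # Corrector step: next prompt carries the failed code, errors, and docs
        prompt = (f"{task}\n\nPrevious code:\n{code}\n\n"
                  f"Errors:\n{feedback}\n\nReference:\n{reference}")
    return best
```

Returning the least-broken attempt when the turn budget runs out is one design choice among several; a stricter controller could return nothing unless the verifier accepts.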

Screenshot taken from the shared study

Closed weights win, but still sweat

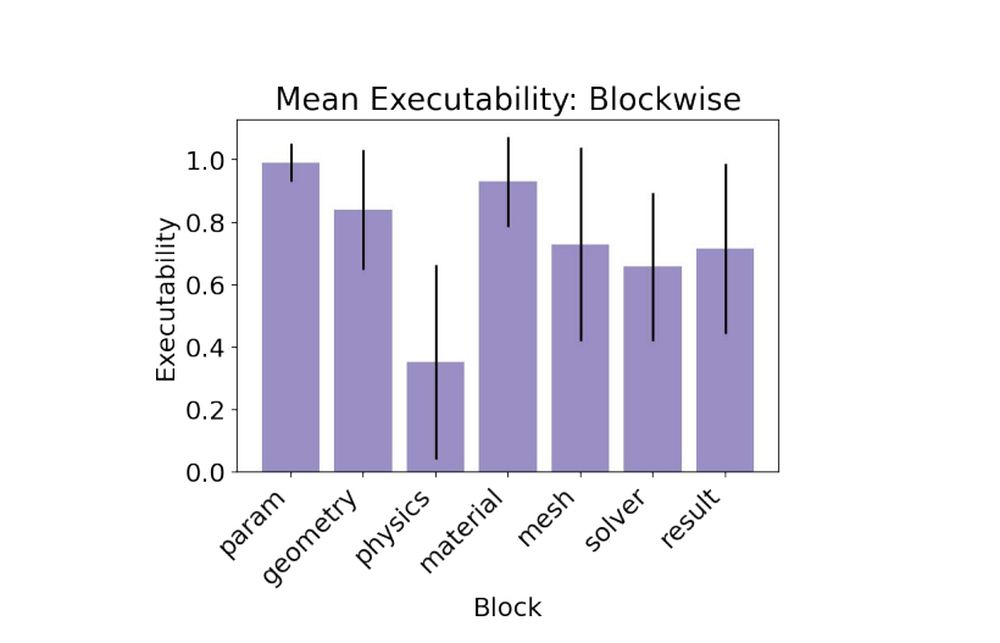

In baseline trials, closed-source models (Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro) outperformed open-weight models. Claude 3.5 Sonnet led the pack with 0.79 executability and was the only model to produce a valid target, on one of the 15 Gold problems. Open models struggled, with some hallucinating physics interfaces or misapplying features. The hardest part was the physics block, where models had to specify accurate boundary conditions and physical properties to get results matching the ground truth.

- Claude 3.5 Sonnet: 0.79 executability, 1/15 valid target

- GPT-4o: 0.78 executability, 0/15 valid target

- Gemini-1.5-Pro: 0.60 executability, 0/15 valid target
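The executability figures above are ratios between 0 and 1. As a rough sketch, assuming executability is the fraction of generated API lines that run without error (check the paper for its exact definition), it could be computed as follows; `run_line` is a hypothetical hook that forwards one call to a simulator session.

```python
from typing import Callable, Sequence

def executability(lines: Sequence[str], run_line: Callable[[str], None]) -> float:
    """Share of generated API lines that run without raising an error."""
    if not lines:
        return 0.0  # treat an empty submission as non-executable
    ok = 0
    for line in lines:
        try:
            run_line(line)  # e.g. forward one call to a live simulator session
        except Exception:
            continue        # a failed call counts against the score
        ok += 1
    return ok / len(lines)
```

Because later calls usually build on earlier ones, a single bad line can trigger a cascade of failures. It also explains why high executability does not guarantee a valid target: code can run cleanly and still model the wrong physics.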

The benchmark includes two task types. In the ModelSpecs task, the LLM gets only the technical specifications and must work out the solution itself. In the Plan task, it gets step-by-step instructions. Surprisingly, the Plan task didn't lead to better performance: models often followed the instructions too literally and hallucinated API names that don't exist. Adding a list of valid COMSOL features to the prompt, called the PhyDoc In-Context strategy, reduced these hallucinations and improved interface factuality.
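A simplified sketch of the PhyDoc idea is shown below: append a list of allowed physics interfaces to the prompt, then score how many interfaces the generated code creates that are actually on that list. The regex targets one call shape modeled on COMSOL's Java API (`physics().create(tag, type, geometry)`); the regex, the function names, and the scoring rule are assumptions, and the interface names are examples only. `ThermalStressSolver` is deliberately made up to play the role of a hallucinated interface.

```python
import re
from typing import Iterable, Optional

# Matches calls like:
#   model.component("comp1").physics().create("ht", "HeatTransfer", "geom1");
# and captures the interface type (second argument). Other call shapes are ignored.
INTERFACE_CALL = re.compile(r'physics\(\)\.create\("[^"]*",\s*"([^"]+)"')

def build_prompt(task_text: str, valid_interfaces: Iterable[str]) -> str:
    """Append an allowed-interface list to the task text (PhyDoc-style grounding)."""
    allowed = "\n".join(f"- {name}" for name in sorted(valid_interfaces))
    return f"{task_text}\n\nUse only these physics interfaces:\n{allowed}\n"

def interface_factuality(code: str, valid_interfaces: Iterable[str]) -> Optional[float]:
    """Share of physics interfaces created in `code` that appear in the valid list."""
    valid = set(valid_interfaces)
    used = INTERFACE_CALL.findall(code)
    if not used:
        return None  # nothing to score
    return sum(name in valid for name in used) / len(used)

valid = ["HeatTransfer", "SolidMechanics"]
generated = (
    'model.component("comp1").physics().create("ht", "HeatTransfer", "geom1");\n'
    'model.component("comp1").physics().create("ts", "ThermalStressSolver", "geom1");'
)
print(interface_factuality(generated, valid))  # one of two interfaces is real
```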

Screenshot taken from the shared study

Lessons for AI engineers

One big takeaway: translation is harder than planning. Even when a model knows what to do, expressing it in COMSOL's domain-specific language (DSL) is the roadblock. The team's remedy is to provide grounding tools, such as annotated code libraries and in-context documentation, and pair them with structured agentic workflows. That recipe turned weak one-shot performance into steady multi-turn improvement: the Multi-Turn Agent strategy reached 88% executability, the highest of all experiments.

- ModelSpecs + Multi-Turn Agent: 0.88 executability, 2/15 valid targets

- ModelSpecs + PhyDoc: 0.62 executability, 1/15 valid targets
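An "annotated code library" can be pictured as a lookup table of snippets, each paired with a plain-English description, that a tool retrieves against the current error or subtask. The sketch below ranks snippets by shared-token overlap between the query and each annotation. Token overlap is a swapped-in technique chosen for simplicity (production systems often use embeddings), not the study's method, and the library entries are hypothetical placeholders.

```python
import re
from collections import Counter
from typing import List, Tuple

def _tokens(text: str) -> Counter:
    """Lowercased alphanumeric tokens with counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def top_snippets(query: str, library: List[Tuple[str, str]], k: int = 2) -> List[str]:
    """Return the code of the k snippets whose annotations best overlap the query."""
    q = _tokens(query)

    def score(entry: Tuple[str, str]) -> int:
        annotation, _code = entry
        return sum((q & _tokens(annotation)).values())  # count of shared tokens

    # sorted() is stable, so ties keep their original library order
    ranked = sorted(library, key=score, reverse=True)
    return [code for _annotation, code in ranked[:k]]

# Hypothetical (annotation, code) pairs; the code strings are placeholders
library = [
    ("Create a rectangle geometry", "rect_snippet"),
    ("Add a heat flux boundary condition with convective cooling", "heat_flux_snippet"),
    ("Set Young's modulus for a linear elastic material", "material_snippet"),
]
print(top_snippets("set convective heat flux boundary", library, k=1))
```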

Simulations are how engineers compress time and risk. FEABench shows that LLMs aren’t ready to run simulations unsupervised, but they’re getting close to becoming useful copilots. That matters if we want AI to assist in rapid prototyping, scientific discovery, or structural design. And if AI can learn to model the physical world as precisely as it mimics language, it won’t just chat—it’ll simulate, solve, and maybe someday, even invent.